@patrick, thanks!

Unfortunately, I do not have enough skills to make the custome module from this code. You will have to write data to PostgreSQL in a loop and wait for 10-20 hours. In any case, it will be faster than if I spend a few more weeks on this problem.

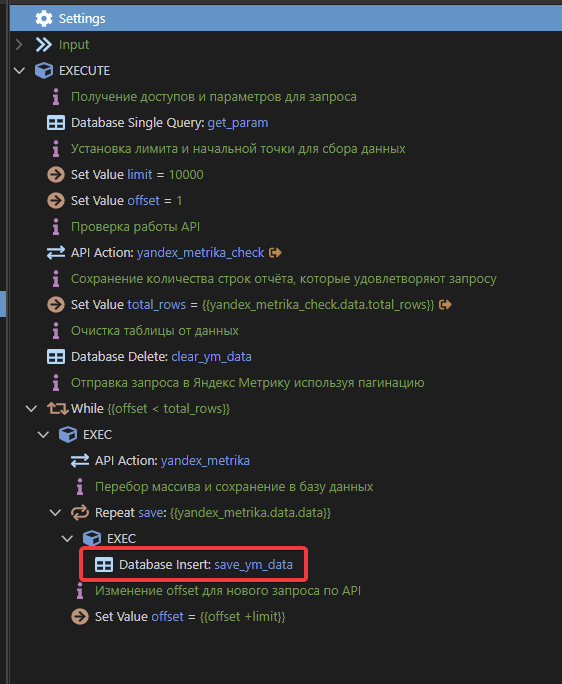

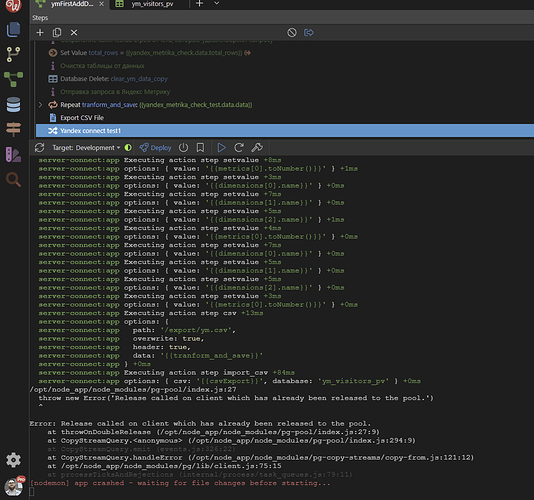

I have created a custom module. But it gives an error. Can you please tell me what could be the problem?

JS file

const ym = require('ym');

var fs = require('fs')

var { Pool } = require('pg')

var copyFrom = require('pg-copy-streams').from

var pool = new Pool()

export.ym = pool.connect(function (err, client, done) {

var stream = client.query(copyFrom('COPY ym.database(options.database) FROM STDIN'))

var fileStream = fs.createReadStream('ym.csv(options.csv)')

fileStream.on('error', done)

stream.on('error', done)

stream.on('finish', done)

fileStream.pipe(stream)

})

HJSON file

{

type: 'custom',

module : 'custom',

action : 'yandex metrika',

groupTitle : 'Import data',

groupIcon : 'fas fa-lg fa-random comp-data',

title : 'Yandex connect @@var(actionName)@@',

icon : 'fas fa-lg fa-random comp-data',

dataScheme: [],

dataPickObject: true,

properties : [

{

group: 'Properties',

variables: [

{

name: 'actionName',

optionName: 'name',

title: 'Name',

type: 'text',

required: true,

defaultValue: ''

},

{

name: 'csv',

optionName: 'csv',

title: 'CSV',

type: 'text',

required: true,

serverDataBindings: true,

defaultValue: ''

},

{

name: 'database',

optionName: 'database',

title: 'Database',

type: 'text',

required: true,

serverDataBindings: true,

defaultValue: ''

},

{

name: 'actionOutput',

optionName: 'output',

title: 'Output',

type: 'boolean',

defaultValue: false

}

]

}

]

}

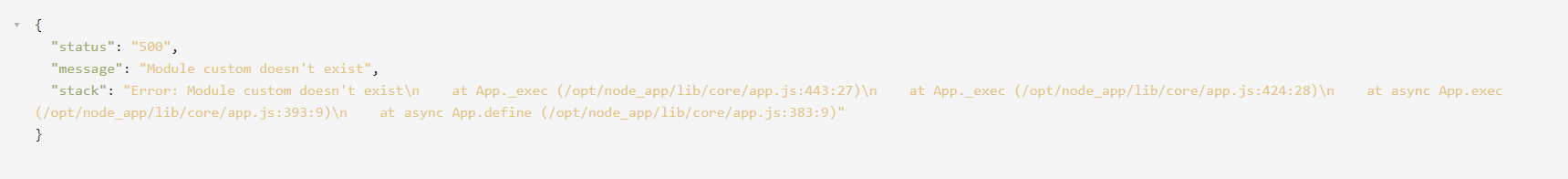

Error

Make sure your .js file matches the module parameter in the hjson file…in your case custom.js.

Also, try the format from the examples:

exports.setvalue= function(options, name) {

return this.parse(options.value);

};

You can also use async function…

And your action from the hjson needs to match the module. Yours don’t match: yandex metrika vs ym.

Module name in the hjson and the js filename should be the same like @mebeingken already mentions. The action name is the exported function. For exporting you need to use exports.{name} = function(options) { /* your code */ }.

Below a sample based on your code. I don’t know what the ym module is for, so I left it out of my sample, change it where needed.

// dataimport.js

exports.import_csv = function(options) {

const fs = require('fs')

const { Pool } = require('pg')

const copyFrom = require('pg-copy-streams').from

const pool = new Pool()

pool.connect(function(err, client, done) {

var stream = client.query(copyFrom(`COPY ${options.database} FROM STDIN`))

var fileStream = fs.createReadStream(options.csv)

fileStream.on('error', done)

stream.on('error', done)

stream.on('finish', done)

fileStream.pipe(stream)

})

}

// dataimport.hjson

{

type: 'custom',

module : 'dataimport',

action : 'import_csv',

groupTitle : 'Import data',

groupIcon : 'fas fa-lg fa-random comp-data',

title : 'Yandex connect @@var(actionName)@@',

icon : 'fas fa-lg fa-random comp-data',

dataScheme: [],

dataPickObject: true,

properties : [

{

group: 'Properties',

variables: [

{

name: 'actionName',

optionName: 'name',

title: 'Name',

type: 'text',

required: true,

defaultValue: ''

},

{

name: 'csv',

optionName: 'csv',

title: 'CSV',

type: 'text',

required: true,

serverDataBindings: true,

defaultValue: ''

},

{

name: 'database',

optionName: 'database',

title: 'Database',

type: 'text',

required: true,

serverDataBindings: true,

defaultValue: ''

},

{

name: 'actionOutput',

optionName: 'output',

title: 'Output',

type: 'boolean',

defaultValue: false

}

]

}

]

}

@patrick, thank you so much!

You helped me a lot! When I read your code, it becomes clear how it works. It’s just that I can’t program and it’s hard for me to understand the syntax, so forgetting curly braces is a common thing for me.

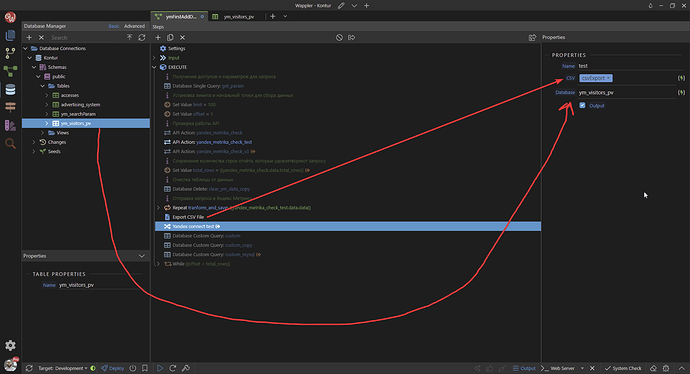

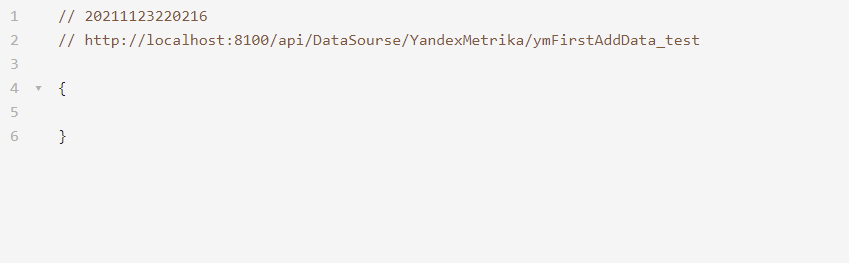

Installed the module. Received an empty answer. The database is empty.

I realized that I only indicate the name of the table, but it is not clear where to specify the requisites for connecting to the database.

Do I understand correctly that I need to add the following code to the “dataimport.js” file?

const pool = new Pool({

user: 'dbuser',

host: 'database.server.com',

database: 'mydb',

password: 'secretpassword',

port: 3211,

})

In the end, it will turn out like this.

exports.import_csv = function(options) {

const fs = require('fs')

const { Pool } = require('pg')

const copyFrom = require('pg-copy-streams').from

const pool = new Pool({

user: 'dbuser',

host: 'database.server.com',

database: 'mydb',

password: 'secretpassword',

port: 3211,

})

pool.connect(function(err, client, done) {

var stream = client.query(copyFrom(`COPY ${options.database} FROM STDIN`))

var fileStream = fs.createReadStream(options.csv)

fileStream.on('error', done)

stream.on('error', done)

stream.on('finish', done)

fileStream.pipe(stream)

})

}

Unfortunately, I could not configure the import  . Maybe someone knows an alternative solution?

. Maybe someone knows an alternative solution?

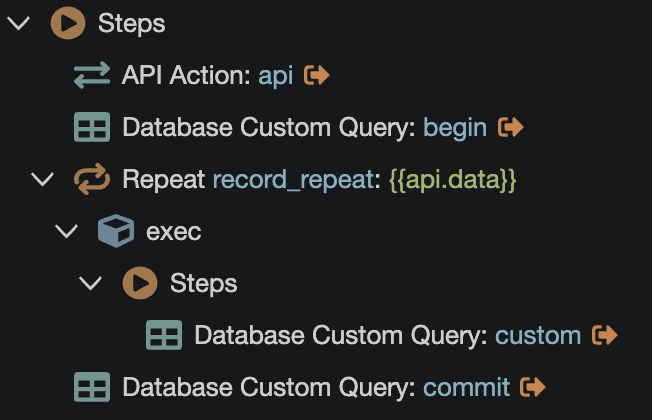

Just wanted to throw in a little advice, I found using transactions can speed things a bit:

BEGIN

INSERT INTO (...)

COMMIT

My particular data went from 13 seconds to 6, practically half (edit: on MariaDB)

Actually, I’ve just realized how slow is the Database Multi Insert in Wappler, even with transactions as mentioned in my previous post.

I did the same test using a third-party SQL viewer, with the import feature, and it basically transforms the CSV into a bulk INSERT statement:

INSERT (...) VALUES (...), (...), (...), (...), (... about 300)

INSERT (...) VALUES (...), (...), (...), (...), (... about 300)

// instead of Wappler:

INSERT (...) VALUES (...)

INSERT (...) VALUES (...)

INSERT (...) VALUES (...)

The difference is huge, like, HUGE! From about 40 seconds to 5 on Postgres (~11000 rows)

We need a smarter Database Multi Insert! And btw, also allow adding ON UPDATE clause so I don’t have to use a custom query:

INSERT (...) VALUES (...), (...), (... about 300) ON UPDATE SET present_at=:P2

Edit: Cloned post to new feature request topic: Smarter Database Multi Insert

Bulk insert is now available in Wappler: