Intro

Bulk insert actions insert a large number of rows into a database table in a single operation, which is more efficient than inserting rows one at a time. This approach improves performance by reducing per-statement overhead and the number of round trips to the database server and, depending on the database, by reducing logging and index-maintenance work. Bulk insert actions are particularly useful for importing data from CSV files.

NOTE: Transactions and Bulk Insert are only supported in PHP and NodeJS. They are not available for ASP or ASP.NET.

In this tutorial we will show you how to bulk insert data from a CSV file.

Bulk Insert CSV data

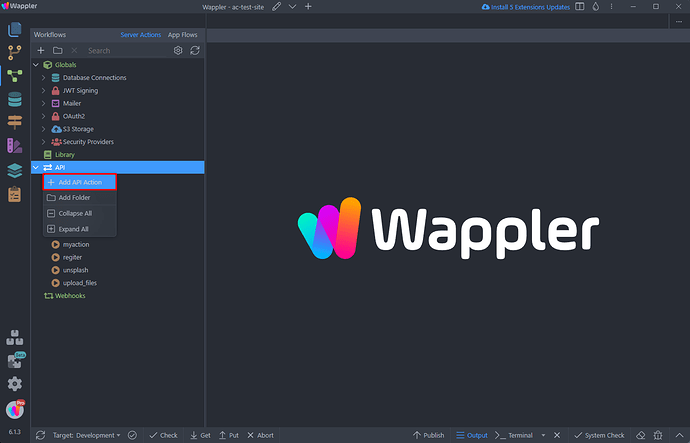

In this example, we import data from a CSV file located on our server. You can implement the same functionality when you need to upload a CSV file and insert its contents, or when you export CSV data from one table and insert it into another.

We start by creating a server action in the Server Connect panel and giving it a name:

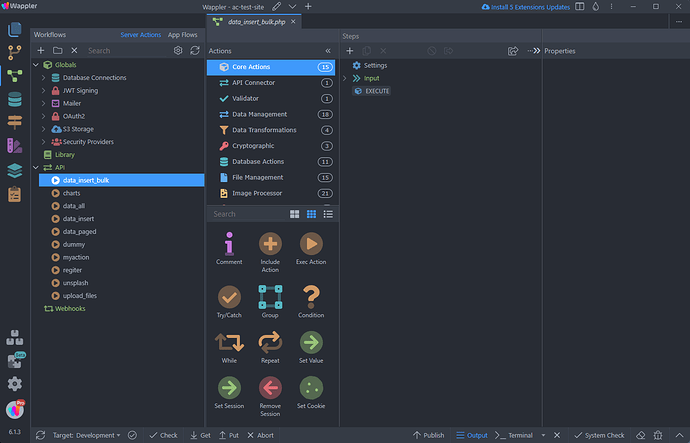

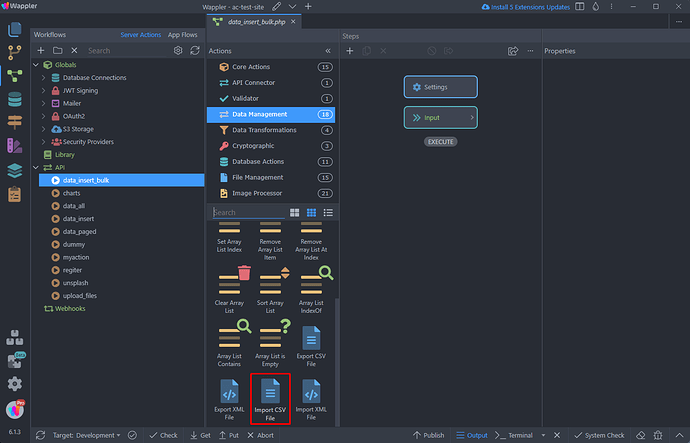

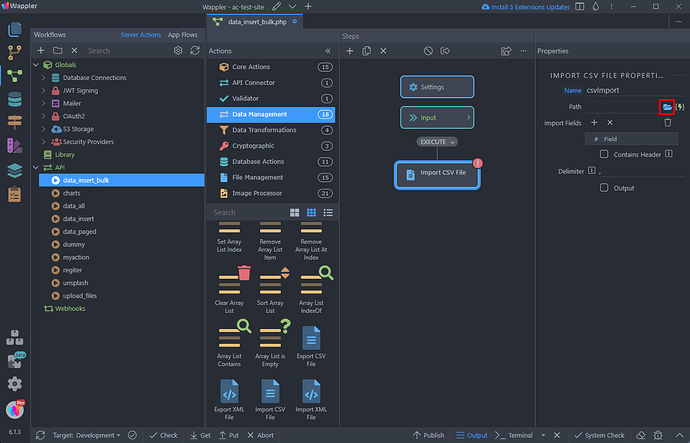

We add an Import CSV File step to the Server Action steps:

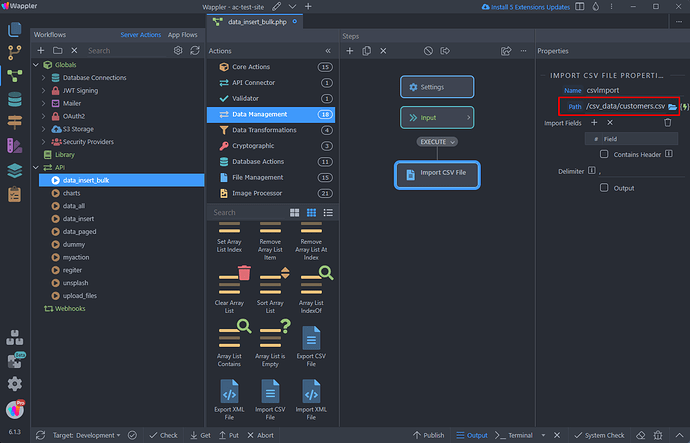

Next, we select the CSV path. As mentioned above, this can be a file on the server or a dynamic path returned by an upload step.

We select a file on our server:

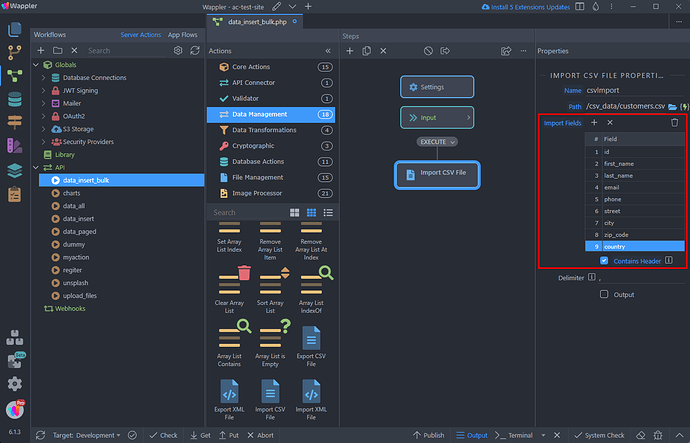

We set up the Import Fields and Header options so that they match the CSV structure:

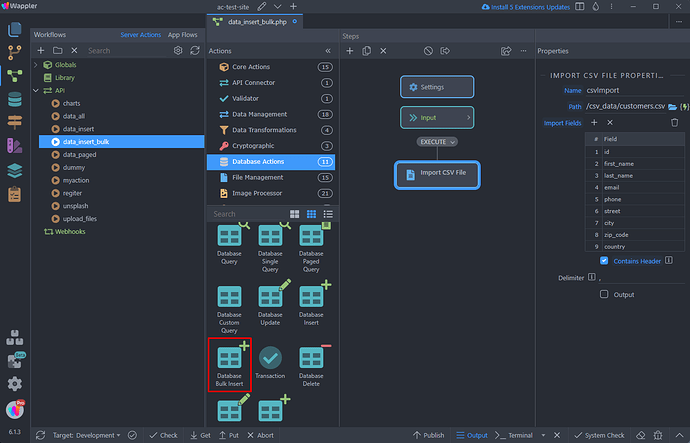

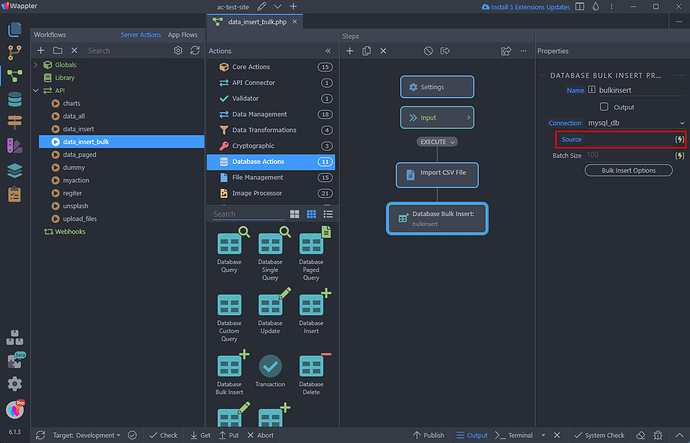

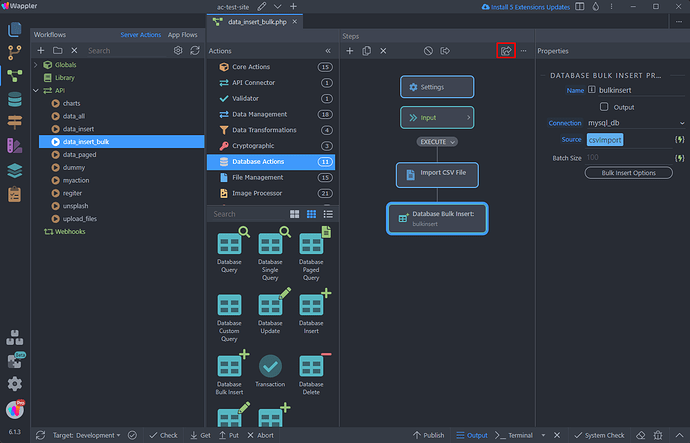
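To make these two options concrete, here is a minimal TypeScript sketch of what they describe: a list of field names, plus a flag saying whether the first line of the file is a header row. It is not Wappler's implementation. The function name `parseCsv` is hypothetical, fields are assumed to be matched by column position, and the simple `split(",")` assumes that no value contains a quoted comma or line break; a full CSV parser is needed for those cases.

```typescript
// Hypothetical sketch: turn CSV text into records keyed by field name.
// Assumptions: fields are matched by column position, and values contain
// no quoted commas or embedded line breaks.
type CsvRecord = Record<string, string>;

function parseCsv(text: string, fields: string[], hasHeader: boolean): CsvRecord[] {
  // Split into lines and drop empty ones (e.g. a trailing newline).
  const lines = text.split(/\r?\n/).filter((line) => line.trim() !== "");
  // When the file has a header row, skip it: it holds labels, not data.
  const dataLines = hasHeader ? lines.slice(1) : lines;
  return dataLines.map((line) => {
    const values = line.split(",");
    const record: CsvRecord = {};
    fields.forEach((field, i) => {
      // Missing trailing values become empty strings.
      record[field] = (values[i] ?? "").trim();
    });
    return record;
  });
}

const csv = "name,email\nAlice,alice@example.com\nBob,bob@example.com\n";
const rows = parseCsv(csv, ["name", "email"], true);
// rows[0] is { name: "Alice", email: "alice@example.com" }
console.log(rows);
```

If the Header option does not match the file, the header line is either imported as a data row or the first data row is skipped, which is why both options need to match the CSV structure.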

The next step is to add the Bulk Insert action. Open Database Actions and add a Database Bulk Insert step:

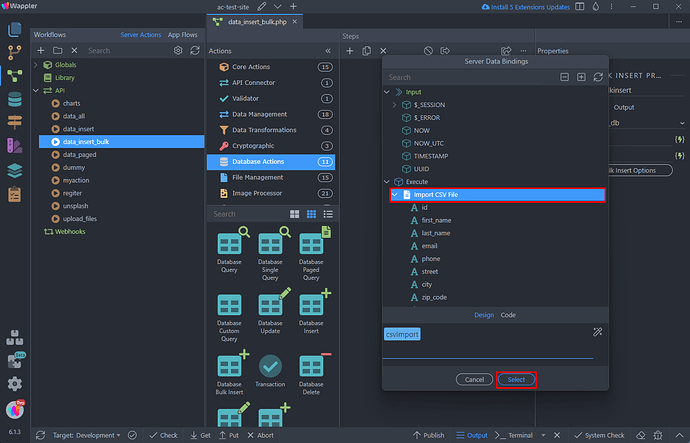

Select the Bulk Insert Source:

In our case, this is the Import CSV File step we added earlier:

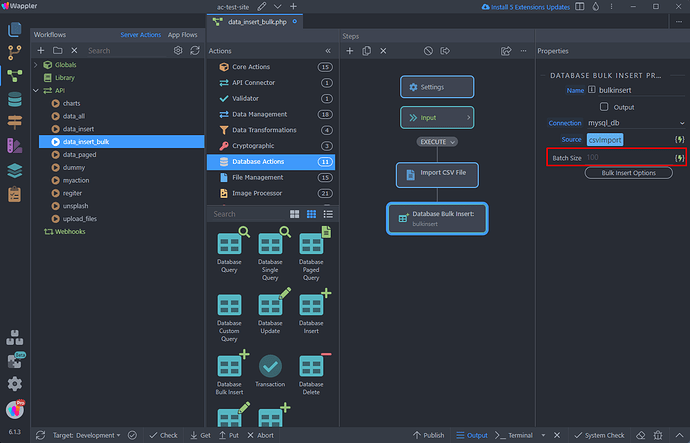

Set the batch size. The batch size refers to the number of records that are inserted into the database in each iteration of the bulk insert operation:

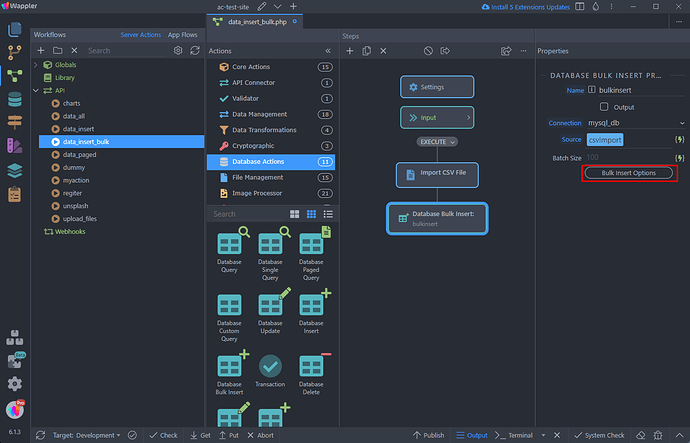
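Conceptually, batching splits the imported records into groups and sends one statement per group, so 10,000 records with a batch size of 1,000 need 10 statements instead of 10,000. The TypeScript sketch below illustrates the idea; it is not how Wappler generates its SQL. The names `buildBatchInserts`, `users`, `name` and `email` are hypothetical, and the `?` placeholders follow the MySQL/SQLite style (PostgreSQL drivers typically use `$1`, `$2`, ...). The table and column names are interpolated into the SQL, so they must come from trusted configuration, never from user input.

```typescript
// Hypothetical sketch of batching (not Wappler's implementation):
// split rows into groups of `batchSize` and build one parameterized
// multi-row INSERT statement per group.
type Row = Record<string, string | number | null>;

interface Statement {
  sql: string;
  params: (string | number | null)[];
}

function buildBatchInserts(
  table: string,
  columns: string[],
  rows: Row[],
  batchSize: number
): Statement[] {
  if (batchSize < 1) {
    throw new Error("batchSize must be at least 1");
  }
  // One "(?, ?, ...)" placeholder group per row.
  const rowPlaceholder = "(" + columns.map(() => "?").join(", ") + ")";
  const statements: Statement[] = [];
  for (let start = 0; start < rows.length; start += batchSize) {
    const batch = rows.slice(start, start + batchSize);
    const params: (string | number | null)[] = [];
    for (const row of batch) {
      for (const column of columns) {
        // Values travel as bound parameters, never concatenated into the SQL.
        params.push(row[column] ?? null);
      }
    }
    statements.push({
      sql:
        `INSERT INTO ${table} (${columns.join(", ")}) VALUES ` +
        batch.map(() => rowPlaceholder).join(", "),
      params,
    });
  }
  return statements;
}

const rows: Row[] = [
  { name: "Alice", email: "alice@example.com" },
  { name: "Bob", email: "bob@example.com" },
  { name: "Carol", email: "carol@example.com" },
  { name: "Dave", email: "dave@example.com" },
  { name: "Erin", email: "erin@example.com" },
];
// 5 rows with a batch size of 2 -> 3 statements (2 + 2 + 1 rows).
const statements = buildBatchInserts("users", ["name", "email"], rows, 2);
console.log(statements.length);
```

Larger batches mean fewer round trips, but each statement grows. Many databases and drivers also limit the number of bound parameters in a single statement, which is one practical reason to keep the batch size moderate.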

Then set the Bulk Insert Options:

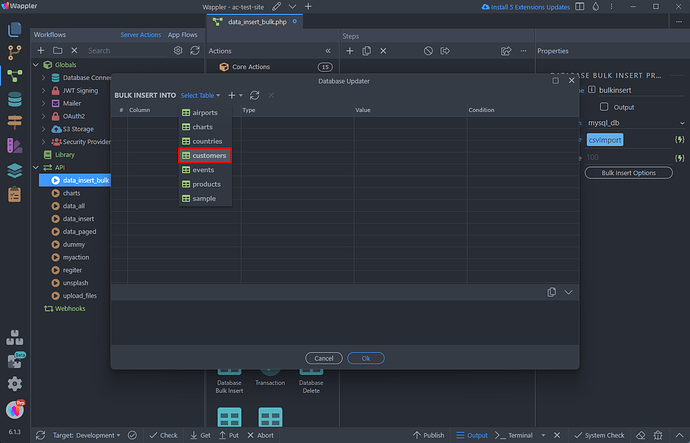

Select the table to insert data into:

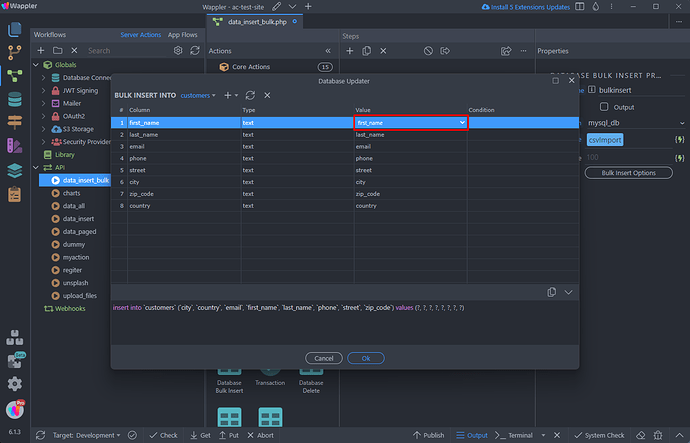

Then select the values for your database table fields:

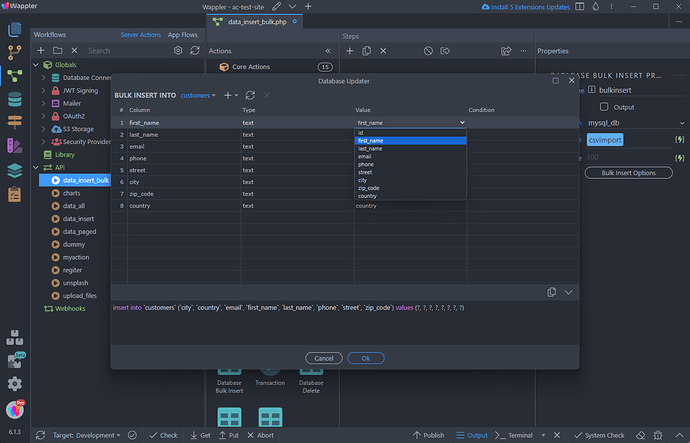

You can choose the values from the dropdown:

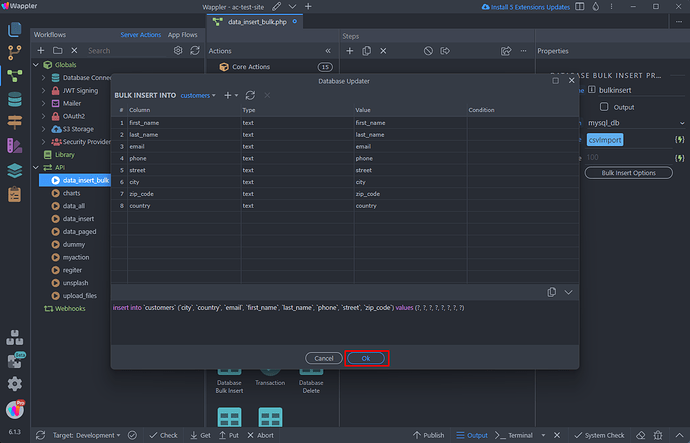
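Choosing a value for each table field amounts to a mapping from database columns to fields of the source records. The minimal TypeScript sketch below shows such a mapping. The function `mapRecord`, the column names and the rule that a missing source field becomes NULL are assumptions for illustration, not documented Wappler behavior.

```typescript
// Hypothetical sketch: each database column names the CSV field it takes
// its value from, mirroring the value picker in the Bulk Insert Options.
type CsvRecord = Record<string, string>;
type FieldMapping = Record<string, string>; // column -> CSV field

function mapRecord(record: CsvRecord, mapping: FieldMapping): Record<string, string | null> {
  const row: Record<string, string | null> = {};
  Object.keys(mapping).forEach((column) => {
    const field = mapping[column];
    // Assumption for this sketch: a missing source field becomes NULL.
    row[column] = Object.prototype.hasOwnProperty.call(record, field) ? record[field] : null;
  });
  return row;
}

const mapping: FieldMapping = { full_name: "name", email_address: "email", phone: "phone" };
const row = mapRecord({ name: "Alice", email: "alice@example.com" }, mapping);
// row is { full_name: "Alice", email_address: "alice@example.com", phone: null }
console.log(row);
```

Keeping the mapping separate from parsing means the same CSV can feed tables whose column names differ from the CSV headers.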

Click OK when you are done.

The server action is now ready. In this case, we can run it directly in the browser to execute the bulk insert:

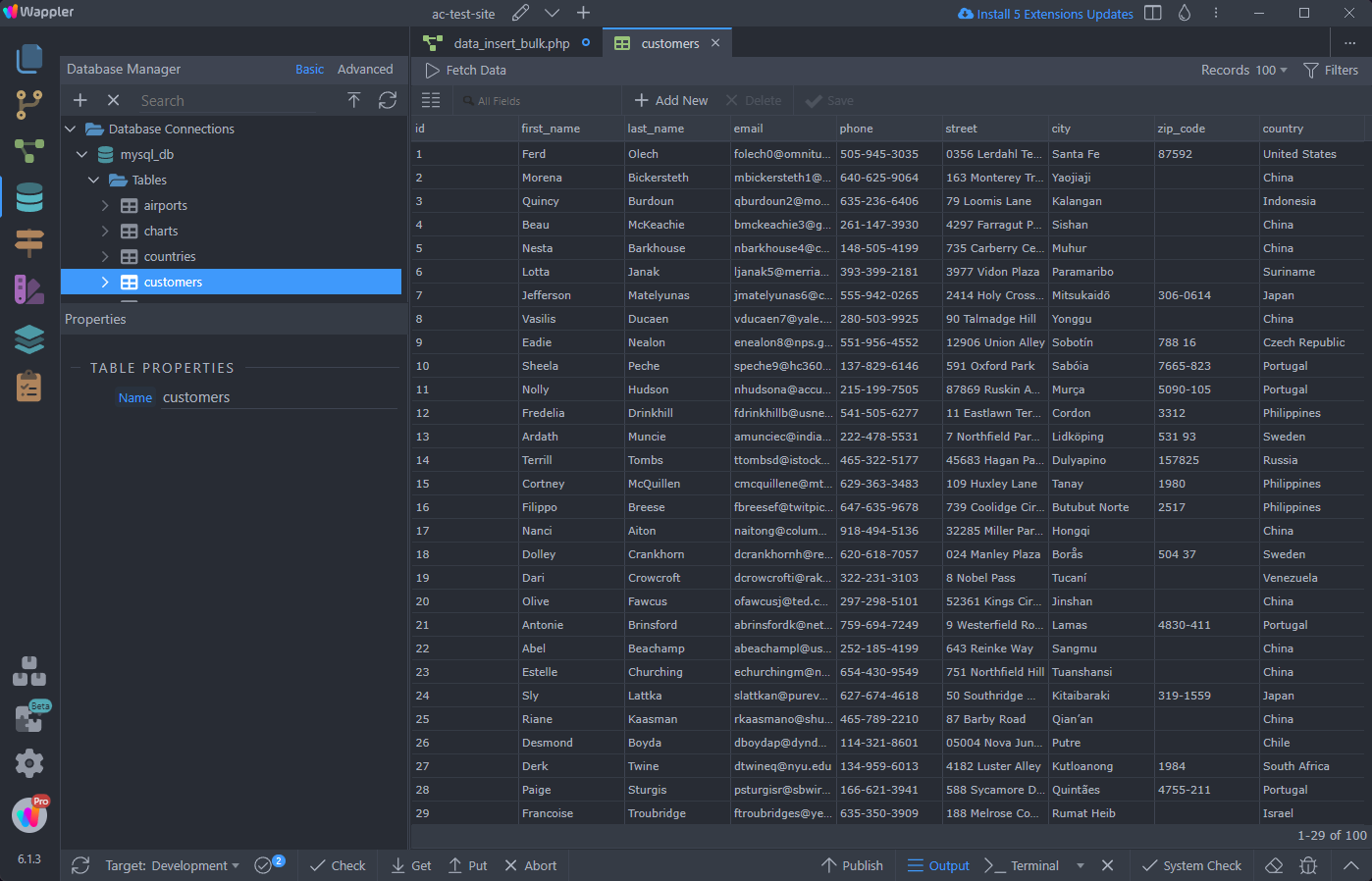

The data has now been imported into the database table.

On Error

If the Bulk Insert action encounters an error and fails before completing, any changes it has already made to the database are automatically rolled back.
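This all-or-nothing behavior is what running all batches inside a single database transaction provides. The TypeScript sketch below shows that pattern. The `Db` interface and the in-memory `FakeDb` are hypothetical stand-ins so the example runs without a database, and the interface is synchronous for brevity, whereas real Node.js database drivers are asynchronous. It illustrates the rollback pattern only; it is not Wappler's code.

```typescript
// Hypothetical, synchronous database interface used only for illustration.
interface Db {
  begin(): void;
  execute(sql: string, params: unknown[]): void;
  commit(): void;
  rollback(): void;
}

interface Statement {
  sql: string;
  params: unknown[];
}

// Run every batch inside one transaction: either all batches are committed,
// or the first failure rolls back everything executed so far.
function runAllOrNothing(db: Db, statements: Statement[]): void {
  db.begin();
  try {
    for (const statement of statements) {
      db.execute(statement.sql, statement.params);
    }
    db.commit();
  } catch (err) {
    db.rollback();
    throw err; // let the caller report the failed import
  }
}

// In-memory stand-in: executed rows stay pending until commit.
class FakeDb implements Db {
  committed: unknown[][] = [];
  private pending: unknown[][] = [];

  begin(): void {
    this.pending = [];
  }
  execute(_sql: string, params: unknown[]): void {
    // Simulate a NOT NULL constraint violation.
    if (params.indexOf(null) !== -1) {
      throw new Error("NOT NULL constraint failed");
    }
    this.pending.push(params);
  }
  commit(): void {
    this.committed = this.committed.concat(this.pending);
    this.pending = [];
  }
  rollback(): void {
    this.pending = [];
  }
}

const db = new FakeDb();
let failed = false;
try {
  runAllOrNothing(db, [
    { sql: "INSERT INTO users (name) VALUES (?)", params: ["Alice"] },
    { sql: "INSERT INTO users (name) VALUES (?)", params: [null] },
  ]);
} catch {
  failed = true;
}
// The first batch was rolled back, so nothing was committed.
console.log(failed, db.committed.length);
```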

Benchmark

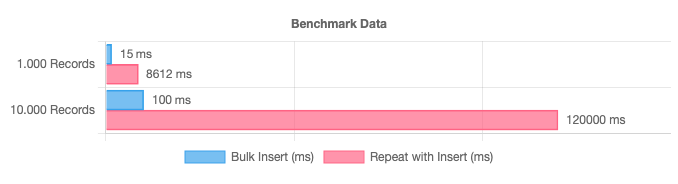

We compared two data insert methods: Repeat with Insert (a separate insert for each record) and Bulk Insert.

Results:

| Records inserted | Repeat with Insert | Bulk Insert |
|---|---|---|
| 1,000 | 8,612 ms | 15 ms |
| 10,000 | 120,000 ms (2 minutes) | 100 ms |
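Dividing the reported times by the number of records makes the two methods easier to compare. The short TypeScript calculation below uses only the benchmark figures above: Repeat with Insert took about 8.6 ms and 12 ms per record, Bulk Insert about 0.015 ms and 0.01 ms per record, so Bulk Insert was roughly 574 and 1,200 times faster in these two runs. These are single measurements from one setup.

```typescript
// Figures taken from the benchmark results in this section.
interface BenchmarkRun {
  records: number;
  repeatMs: number; // Repeat with Insert
  bulkMs: number; // Bulk Insert
}

const runs: BenchmarkRun[] = [
  { records: 1000, repeatMs: 8612, bulkMs: 15 },
  { records: 10000, repeatMs: 120000, bulkMs: 100 },
];

// Average time per record for each method, and how many times faster Bulk Insert was.
function summarize(run: BenchmarkRun) {
  return {
    records: run.records,
    repeatMsPerRecord: run.repeatMs / run.records,
    bulkMsPerRecord: run.bulkMs / run.records,
    speedup: Math.round(run.repeatMs / run.bulkMs),
  };
}

const summaries = runs.map(summarize);
for (const s of summaries) {
  console.log(s);
}
```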