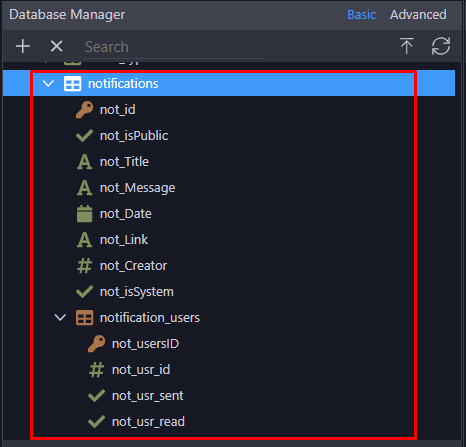

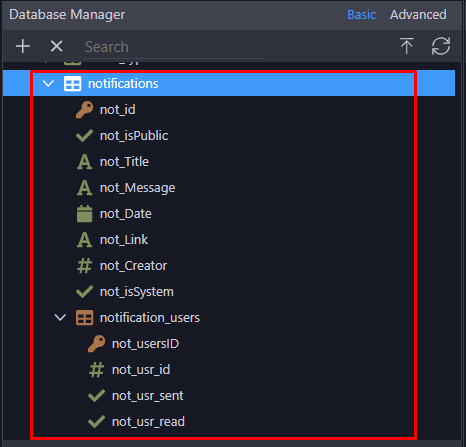

I have a table Notifications with some columns, plus a subtable that is updated when a notification is SENT to the user (not_usr_sent==1) and when it is READ by the user (not_usr_read==1).

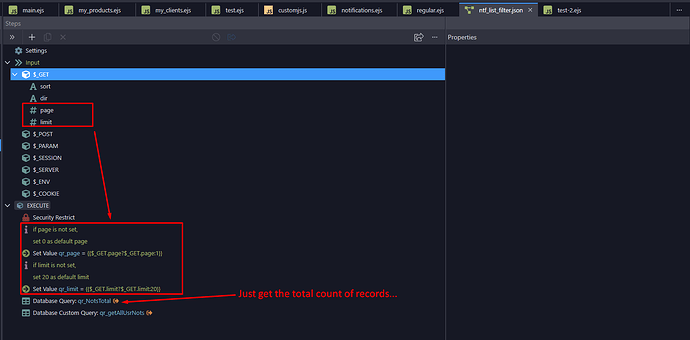

So, first I created the serveraction that reads the notifications.

We need 2 $_GET variables:

- page: holds the current page of results

- limit: defines the number of records returned on each call

We define 2 setValue steps for those two variables and set their default values.

We have a query just for getting the total count of records.

And finally, we add a custom query that pulls the current page (page) of limit records.

Let's look at it...

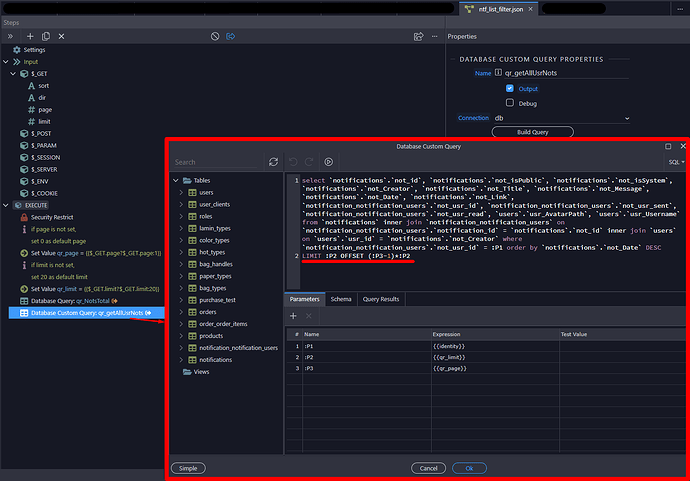

A basic SELECT with the addition of LIMIT :P2 OFFSET (:P3-1)*:P2, where :P2 is the limit and :P3 is the page.

Nothing special...
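For anyone who wants to see the paging arithmetic outside the Wappler UI, here is a minimal Python sketch using an in-memory SQLite table. The table, its columns (not_id, not_title) and the helper fetch_page are made up for illustration; only the page/limit defaults, the separate count query and the (page-1)*limit offset come from the steps above.

```python
import sqlite3


def fetch_page(conn, page=1, limit=10):
    """Return (total_count, rows) for one page of a hypothetical notifications table."""
    # Separate query just for the total number of records.
    total = conn.execute("SELECT COUNT(*) FROM notifications").fetchone()[0]

    # Same arithmetic as OFFSET (:P3-1)*:P2, computed here before binding.
    offset = (page - 1) * limit
    rows = conn.execute(
        "SELECT not_id, not_title FROM notifications "
        "ORDER BY not_id LIMIT ? OFFSET ?",
        (limit, offset),
    ).fetchall()
    return total, rows


# Hypothetical sample data: 25 notifications.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE notifications (not_id INTEGER PRIMARY KEY, not_title TEXT)")
conn.executemany(
    "INSERT INTO notifications (not_id, not_title) VALUES (?, ?)",
    [(i, f"Notification {i}") for i in range(1, 26)],
)

total, rows = fetch_page(conn, page=3, limit=10)
print(total, [r[0] for r in rows])  # 25 [21, 22, 23, 24, 25]
```

With 25 records and limit=10, page 3 starts at offset 20 and returns only the last 5 rows, which is also how the page can tell it has reached the end.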

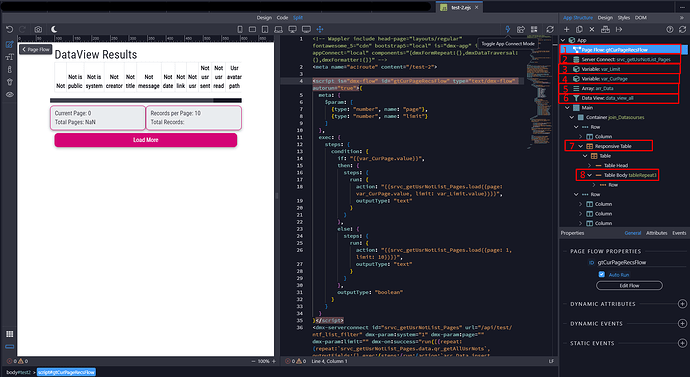

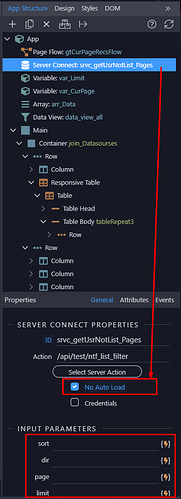

Now, on our page, we have:

- PageFlow that calls the serverconnect

- Our serverconnect that we created before

- Variable var_limit that holds the LIMIT for our serverconnect, value=10 in our case.

- Variable var_CurPage that holds the current page for our serverconnect, value=1 (first page of results).

- Our helper Array, to which the results of each serverconnect call are added.

- A DataView that mirrors our Array data (DataSource=arr_Data.items).

- A Table Generator that lists our results.

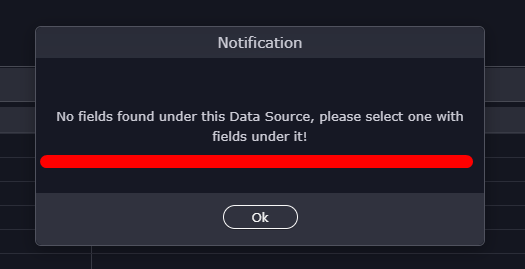

A trick is needed here, because the Table Generator would accept neither the Array nor the DataView:

We populated it from the ServerConnect, since that has exactly the same structure, and...

- In our table's body repeat (tablerepeat), we bound the DataView.

Let's see in detail the above steps.

We have a PageFlow that handles the calls to the serverconnect.

It is set to AutoRun.

But let me talk about the serverconnect first...

It is set to "No Auto Load" because it will be called only through the PageFlow and its parameters are empty.

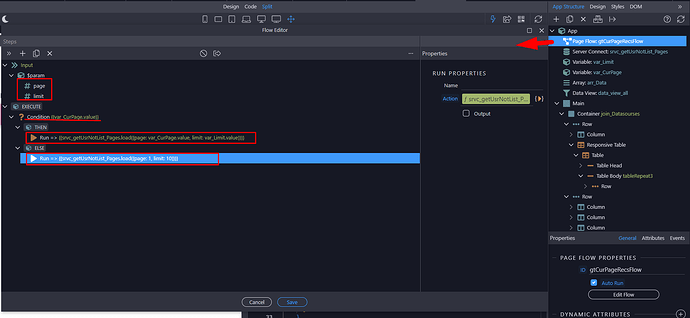

Back to the PageFlow

We define the parameters needed for the limit and offset of our serveraction.

And we add a condition to check whether the parameters have a value or not.

If they have no value, we call the serveraction and pass the default values of page=1 and limit=10.

(We could just skip this step if the default values we have set in our serveraction are fine for our case.)
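The check described above can be sketched in a few lines of Python. The function name build_params is hypothetical, and treating an empty or missing value as "no value" is an assumption about how the condition is evaluated.

```python
def build_params(page=None, limit=None):
    """Return the page/limit parameters to send, filling in the defaults."""
    # A missing or empty value falls back to page=1 and limit=10.
    return {
        "page": page if page else 1,
        "limit": limit if limit else 10,
    }


print(build_params())                  # {'page': 1, 'limit': 10}
print(build_params(page=2, limit=10))  # {'page': 2, 'limit': 10}
```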

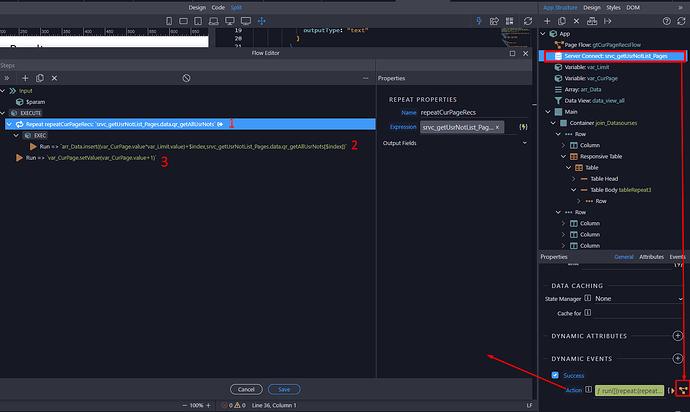

So, the serverconnect is called for the first time, and on its Success event we set an inline flow. In there:

- we add a repeat step based on the serveraction custom query that returns our results

- on each loop, we insert into the array:

  - at the index: (var_CurPage.value*var_Limit.value)+$index

  - the current row's value: srvc_getUsrNotList_Pages.data.qr_getAllUsrNots[$index]

- after the repeat (outside it), we increase the value of the variable: var_CurPage=var_CurPage.value+1
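To make the Success flow easier to follow, here is a small Python sketch of the same idea: each page of rows is inserted into one growing list, and the page counter is increased after the loop. The function append_page and the sample rows are hypothetical. Note that the sketch uses the zero-based position (cur_page-1)*limit+index, which is a variant of the index expression in the post; in Python, inserting at an index past the end of a list simply appends, so both expressions give the same order here. Whether the Wappler Array behaves the same way is an assumption on my part.

```python
def append_page(items, rows, cur_page, limit):
    """Insert one page of rows into items and return the next page number."""
    for index, row in enumerate(rows):
        # Zero-based slot for this row across all pages (assumed variant
        # of the index expression used in the post).
        position = (cur_page - 1) * limit + index
        items.insert(position, row)
    # After the loop, advance the page counter for the next call.
    return cur_page + 1


items = []
cur_page = 1
limit = 3
# Hypothetical pages, as three successive server responses might return them.
pages = [["n1", "n2", "n3"], ["n4", "n5", "n6"], ["n7"]]
for rows in pages:
    cur_page = append_page(items, rows, cur_page, limit)

print(items)     # ['n1', 'n2', 'n3', 'n4', 'n5', 'n6', 'n7']
print(cur_page)  # 4
```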

And we are ready...

You can check the result in the short video in my previous post.

Anyone is welcome to try it and give feedback if there is a problem/issue.

Especially if you have a "heavy" dataset to work on, so we can see how it behaves...

Any suggestions, corrections or ideas are always welcome.

Thanks for reading!