Hi all,

I followed the steps for setting up and deploying Docker to Digital Ocean: https://docs.wappler.io/t/docker-part-4-deploy-in-seconds-to-the-cloud-with-docker-machines/14373

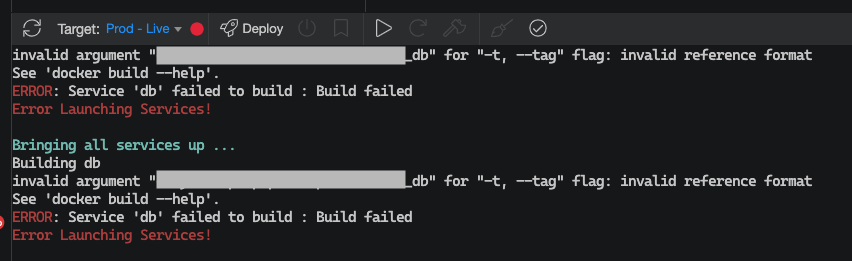

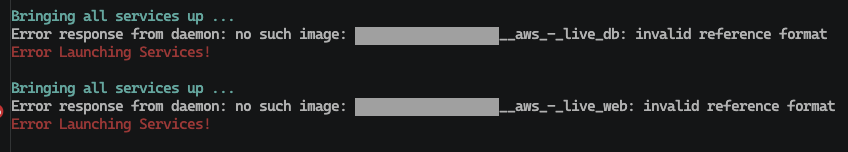

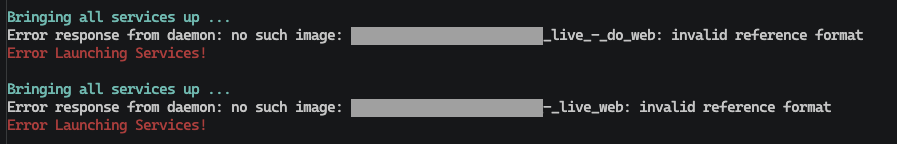

But when attempting to deploy to the remote live server (Digital Ocean), I get:

ERROR: Service 'db' failed to build : Build failed

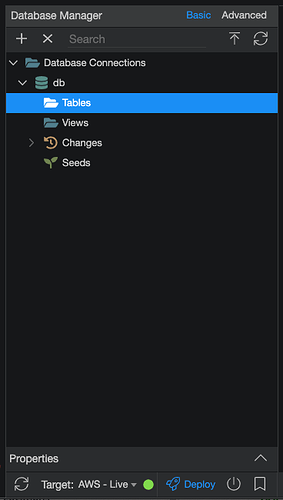

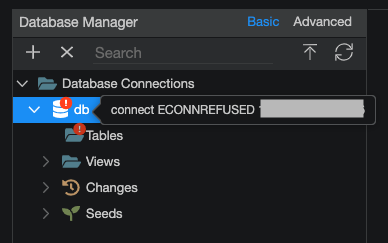

In the Database Connection section, it says ‘connect ECONNREFUSED’.

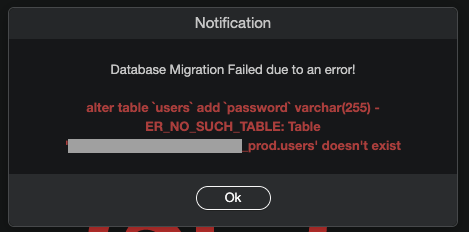

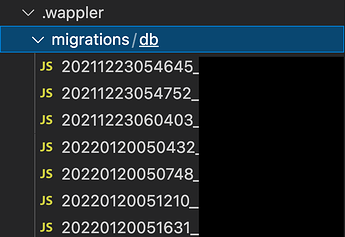

I’m not sure whether this is related to a database issue I ran into at the start of developing my app (Issues deleting placeholder Docker tables), since @Apple said it could cause problems when deploying to another target.

Any suggestions on how to fix this?