Hi.

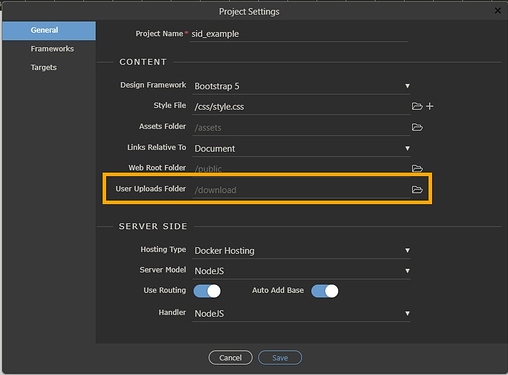

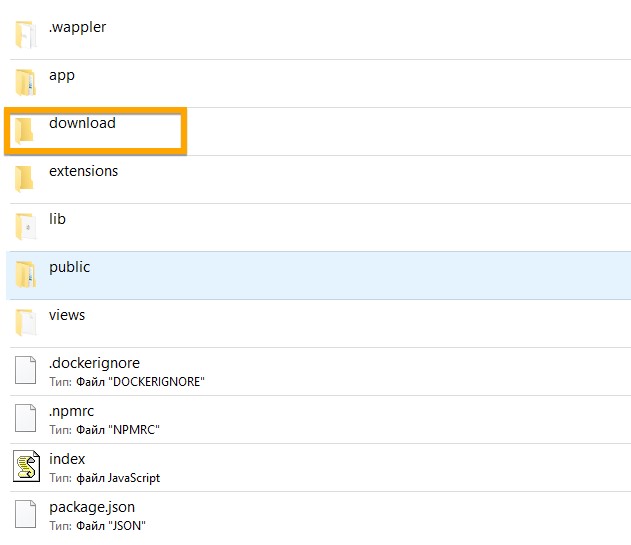

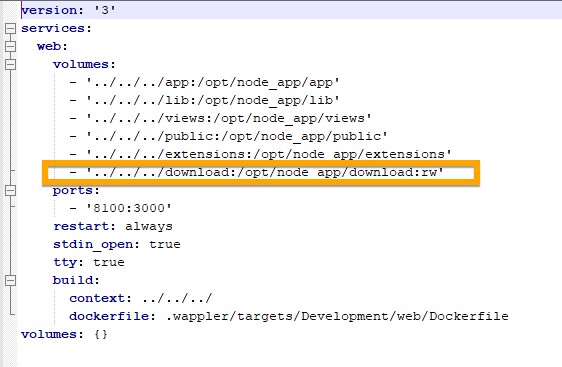

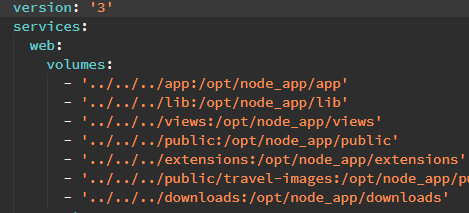

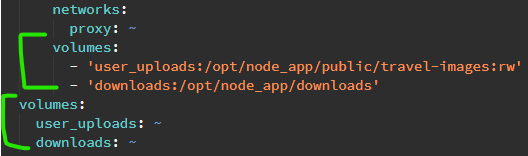

I have a project with a Node.js/DigitalOcean/Docker setup.

In my public user-uploads folder, I have a folder “downloads”, which contains files/zips that are generated on demand and can be downloaded by a logged-in user.

But the issue is that these files are not really secure.

Even if I use the server-side file download mechanism, the actual files are still exposed.

Anyone who can guess the path of these files can download them directly.

I have handled this before using an .htaccess file, but it does not seem to be working here.

This is also the method suggested in the community in a few old posts.

I also tried creating a custom route, but the actual file path gets resolved before my custom route, so the file still gets downloaded. This is not really a solution anyway, since security restrict, DB access, etc. are not directly available in custom routes; I was just trying to see if it works.
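To make the goal concrete, here is a minimal sketch of the behaviour I am after: the files live outside the public folder, and a path is only handed back when the user is logged in and the requested name stays inside that folder. Everything named here is hypothetical and only for illustration (the `resolveDownload` helper, the `session.userId` field, and the `/opt/app/private/downloads` location); it is not taken from my actual project.

```javascript
const path = require("node:path");

// Hypothetical folder outside the public directory, so no static route can reach it.
const PRIVATE_DOWNLOADS_DIR = "/opt/app/private/downloads";

// Returns the absolute path of the requested file, or null when the request
// should be refused (no logged-in user, or a name that escapes the folder).
// `session.userId` is an assumed shape for "the user is logged in".
function resolveDownload(session, requestedName, baseDir = PRIVATE_DOWNLOADS_DIR) {
  if (!session || !session.userId) return null; // not logged in
  if (typeof requestedName !== "string" || requestedName === "") return null;

  const base = path.resolve(baseDir);
  const target = path.resolve(base, requestedName);

  // Reject names such as "../secret.txt" that resolve outside the base folder.
  if (!target.startsWith(base + path.sep)) return null;

  return target;
}
```

If the underlying server is Express (an assumption on my part), the returned path could then be passed to `res.download(filePath)` inside a route handler, with a 403 or 404 response when the helper returns null.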

Can anyone please help me figure out how to secure these files from direct access?