Hello,

I am trying to use the S3 Connector but am encountering some issues. I was hoping someone could explain what certain fields are for. For context, I am using Auth0 to let users log into my site. Users should then be able to upload files and send them to an S3 bucket.

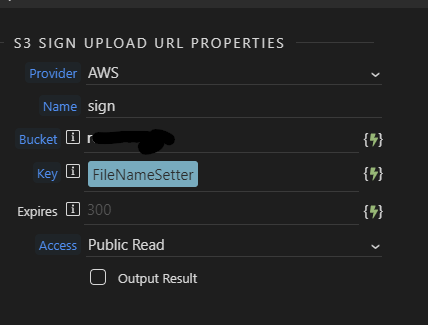

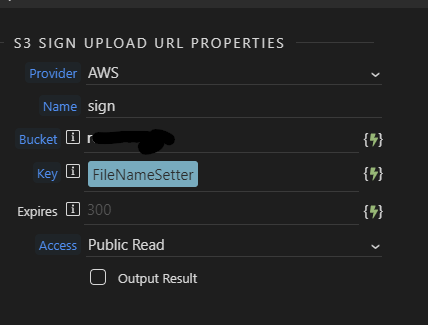

I followed the instructions here, but could someone elaborate on the different fields in the Sign Upload URL and Set Value steps?

S3 Steps

The Provider should be connected to an S3 Storage that you’ve already set up. This is in my Workflow area:

You’ll see that the S3 Storage has “AWS” below it - and that’s what my Provider is assigned to.

The Name is set to “sign” for me. I’m not 100% sure about this, but I think it’s the name your upload has while it is uploading.

The Bucket is the name of the bucket at your S3 provider. For instance, if you wanted to use Amazon Web Services (AWS), you would log in and create a bucket called “my-bucket” (AWS bucket names must be lowercase), and then enter that same name, “my-bucket”, in the Bucket field here.

Key is what your file will be named after the upload.

I set mine to “FileNameSetter”, which takes the name of the upload from $_GET and appends a random hash to it. Why? Just so users can’t guess the filename later.
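For readers outside Wappler, here is a minimal Python sketch of the same idea: take the uploaded file’s name and append a random hash so the final key is hard to guess. It is not the poster’s actual “FileNameSetter” expression; the function name `make_upload_key` and the `uploads/` prefix are hypothetical.

```python
import os
import secrets


def make_upload_key(original_name: str, prefix: str = "uploads/") -> str:
    """Build an S3 object key from the uploaded file's name plus a random hash.

    The "uploads/" prefix is a hypothetical folder inside the bucket.
    """
    # Keep only the last path component, so a name like "../x.zip"
    # cannot add extra folders to the key.
    base = os.path.basename(original_name.replace("\\", "/"))
    stem, ext = os.path.splitext(base)
    # 16 random bytes -> 32 hex characters that are impractical to guess.
    token = secrets.token_hex(16)
    return f"{prefix}{stem}-{token}{ext}"


if __name__ == "__main__":
    print(make_upload_key("Inventory.zip"))
```

For example, `make_upload_key("Inventory.zip")` returns `uploads/Inventory-` followed by 32 hex characters and `.zip`, and a different hash on every call.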

Expires is how long, in seconds, the signed link stays valid before it expires.
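Assuming the connector produces AWS Signature Version 4 presigned URLs (an assumption; the thread doesn’t say), the Expires value is capped at 604800 seconds (7 days). A small sketch that validates the value and computes when the link stops working; `link_expiry` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

# SigV4 presigned URLs accept an expiry of at most 604800 seconds (7 days).
MAX_PRESIGN_SECONDS = 7 * 24 * 60 * 60


def link_expiry(expires_seconds: int, signed_at: datetime) -> datetime:
    """Return the moment a signed upload link stops working."""
    if not 1 <= expires_seconds <= MAX_PRESIGN_SECONDS:
        raise ValueError(
            f"Expires must be between 1 and {MAX_PRESIGN_SECONDS} seconds")
    return signed_at + timedelta(seconds=expires_seconds)


if __name__ == "__main__":
    signed = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    print(link_expiry(300, signed))  # 2024-01-01 12:05:00+00:00
```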

Access is either Public Read or Private. This value, I think, is passed to the S3 provider.

Is that what you wanted to know?

Yeah, thank you for the explanation.

I have a related question too. I have everything set up as I would expect it needs to be. But when I add a file and click the Upload button on the S3 Upload component on the frontend, the server logs show it calling the API I created (GetUserCredentials), and the first two steps it runs are:

- Execute Action Step Provider - this calls my Auth0 OAuth2 config, which is set up correctly and works when I make external API calls

- Execute Action Step Authorize - after this step, none of the actions I actually added to this API (GetUserCredentials) appear

After these two steps it fails with a CORS error. Is it supposed to go through these two steps first, and if so, why does it not follow through with the redirect? The error I see is:

Access to XMLHttpRequest at ‘https://dev-rmk80jpl.us.auth0.com/authorize...Access-Control-Allow-Origin=*&Content-Type=application%2Fzip’ (redirected from ‘http://localhost:3000/api/GetUserCredentials?name=Inventory.zip’) from origin ‘http://localhost:3000’ has been blocked by CORS policy: No ‘Access-Control-Allow-Origin’ header is present on the requested resource.
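One way to read this error: the browser followed the API’s redirect to Auth0’s /authorize endpoint inside the XHR, and Auth0’s response carried no Access-Control-Allow-Origin header, so the browser refused to hand it to the page. The `Access-Control-Allow-Origin=*` in the redirect URL is a query parameter, not a response header, so it cannot satisfy the check. Below is a deliberately simplified Python model of that check (it ignores preflight requests and other details of the Fetch Standard); `cors_allows` is a hypothetical name.

```python
from typing import Mapping, Optional


def cors_allows(request_origin: str,
                response_headers: Mapping[str, str],
                with_credentials: bool = False) -> bool:
    """Simplified model of the browser's CORS check on a cross-origin response.

    Only Access-Control-Allow-Origin (and, for credentialed requests,
    Access-Control-Allow-Credentials) is considered.
    """
    # HTTP header names are case-insensitive.
    headers = {k.lower(): v for k, v in response_headers.items()}
    allow_origin: Optional[str] = headers.get("access-control-allow-origin")
    if allow_origin is None:
        # The situation in the error above: no such header on the response.
        return False
    if with_credentials:
        # "*" is not accepted when cookies/credentials are sent.
        return (allow_origin == request_origin
                and headers.get("access-control-allow-credentials") == "true")
    return allow_origin in ("*", request_origin)


if __name__ == "__main__":
    # Auth0's /authorize page, as seen by the XHR: no CORS header at all.
    print(cors_allows("http://localhost:3000", {"Content-Type": "text/html"}))
```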

Are you using your own computer to host this?

Because localhost:3000 is stuff on your own computer. Are you sure you’re actually reaching out to the web?

Also, if it’s a CORS error - what service are you using? Digital Ocean or AWS? Or what?

I am using localhost during development and http://orbits.cap.orbits-ongology.xyz/ as a staging site; in both cases I get the same CORS errors.

My staging server is hosted on Caprover.

I don’t know if your stuff will work while on localhost or not.

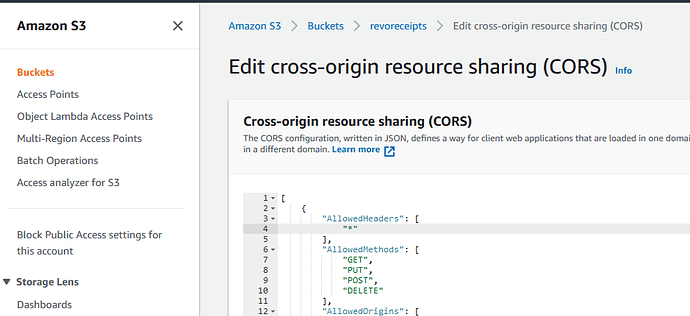

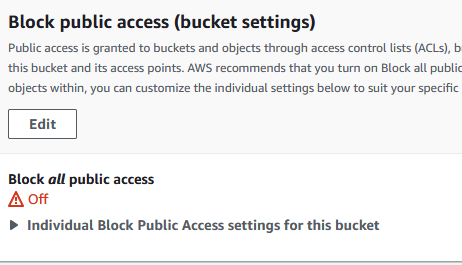

I’m not familiar with Caprover at all, but I know that on AWS and other S3 services, if you’re getting a CORS error, you probably need to define which sites are allowed to upload to your bucket.

So, go into Caprover and find where you can edit your CORS data. Try putting something like this in:

```json
[
  {
    "AllowedHeaders": [
      "*"
    ],
    "AllowedMethods": [
      "GET",
      "PUT",
      "POST",
      "DELETE"
    ],
    "AllowedOrigins": [
      "https://yourdomain.com",
      "http://yourdomain.com"
    ],
    "ExposeHeaders": []
  }
]
```
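Note that this rule only allows https://yourdomain.com and http://yourdomain.com, so requests from the origins in this thread (http://localhost:3000 and the staging site) would not match it. The Python sketch below approximates how an S3 CORS rule matches the browser’s Origin header, based on AWS’s documented rule that an AllowedOrigins entry may contain at most one `*` wildcard, and prints a version of the configuration with the thread’s origins added. An origin is scheme, host and port with no trailing slash. This is an illustration, not an AWS API call; `origin_matches` is a hypothetical helper.

```python
import json
from typing import List


def origin_matches(origin: str, allowed_origins: List[str]) -> bool:
    """Approximate S3's matching of an Origin header against AllowedOrigins.

    Scheme and port are part of the origin, so "http://localhost:3000"
    does not match "http://localhost".
    """
    for pattern in allowed_origins:
        if pattern.count("*") > 1:
            raise ValueError(f"only one '*' allowed per origin: {pattern}")
        if "*" not in pattern:
            if origin == pattern:
                return True
            continue
        prefix, suffix = pattern.split("*")
        # The wildcard stands for any (possibly empty) run of characters.
        if (origin.startswith(prefix) and origin.endswith(suffix)
                and len(origin) >= len(prefix) + len(suffix)):
            return True
    return False


# The rule from the reply, with this thread's origins added
# (no trailing slash: an Origin header never has one).
cors_rules = [{
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["GET", "PUT", "POST", "DELETE"],
    "AllowedOrigins": [
        "https://yourdomain.com",
        "http://yourdomain.com",
        "http://localhost:3000",
        "http://orbits.cap.orbits-ongology.xyz",
    ],
    "ExposeHeaders": [],
}]

if __name__ == "__main__":
    for origin in ("http://localhost:3000", "http://localhost:8080"):
        print(origin, origin_matches(origin, cors_rules[0]["AllowedOrigins"]))
    print(json.dumps(cors_rules, indent=2))
```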

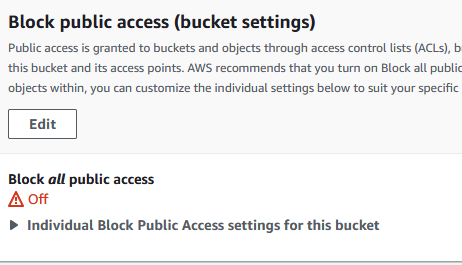

You also want to make sure that your security/permissions settings allow uploads from a third-party site.

So I’ve checked the two suggestions you made, and they were already set up this way.

I’ve tried skipping the Sign Upload step and instead using a form to upload the file, then calling the S3 Provider and an S3 PUT File step. But after the S3 Provider step, I again see the API make an uncredentialed redirect to Auth0, which rejects the request.

All of the URLs have been tested directly and work as expected, but this part of the S3 request gives a CORS error.

I guess my question is: has anyone used S3 with Auth0 specifically, or with any other OAuth provider?

Hey, I could really use some help with a new issue I’ve been encountering. I saw a post suggesting that, because the Server Connect API call is sent via AJAX, it could be causing the CORS error I described earlier in this thread. That post suggested following the steps here to work around it: https://docs.wappler.io/t/using-oauth2-connector-with-facebook/12345#Setting-up-the-login-page. You can see my setup below:

https://imgur.com/a/uXCcs56
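The idea behind that workaround is presumably that a top-level page navigation (a normal link or form submit) is not subject to CORS, so the redirect to Auth0 can complete, whereas an AJAX call cannot follow it. For reference, a minimal Python sketch of the authorization request such a redirect lands on, using the standard OAuth 2.0 authorization-code parameters (RFC 6749, section 4.1.1). The Auth0 domain comes from the error earlier in the thread; the client ID, callback URL and `build_authorize_url` name are placeholders, and Wappler’s OAuth2 connector builds this URL itself.

```python
import secrets
from typing import Tuple
from urllib.parse import urlencode


def build_authorize_url(domain: str, client_id: str, redirect_uri: str,
                        scope: str = "openid profile email") -> Tuple[str, str]:
    """Return (url, state) for an OAuth 2.0 authorization-code request.

    The caller should store `state` (e.g. in the session) and compare it
    with the `state` sent back to redirect_uri, to reject forged callbacks.
    """
    state = secrets.token_urlsafe(16)
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,
    })
    return f"https://{domain}/authorize?{query}", state


if __name__ == "__main__":
    url, state = build_authorize_url(
        "dev-rmk80jpl.us.auth0.com",
        "YOUR_CLIENT_ID",                        # placeholder
        "http://localhost:3000/oauth/callback",  # placeholder
    )
    # Send the whole browser window here (a normal link), not an AJAX call.
    print(url)
```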

However, I can’t verify whether this works: when I click the link that calls my Server Connect API (a POST), I don’t know how to get the form data to the routed API call. The resulting page just says my two POST params (filesField and projectName) are required, even though I fill them in before clicking the link.

My API’s $_POST variables are connected to the form I am using, but the API call seems to happen before the form is submitted, so my POST params are missing from the call.
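A likely explanation, offered as an assumption: clicking a plain link makes the browser send a GET request with no body, so $_POST (and therefore filesField and projectName) is empty. Those values only arrive when the form itself is submitted with method="post" (and enctype="multipart/form-data" for the file field). The Python sketch below builds by hand the kind of multipart/form-data body a form submit sends; the helper name `encode_multipart`, the projectName value, the file bytes and the /api/UploadProject route are all hypothetical.

```python
import secrets
import urllib.request
from typing import Dict, Tuple


def encode_multipart(
    fields: Dict[str, str],
    files: Dict[str, Tuple[str, str, bytes]],
) -> Tuple[bytes, str]:
    """Encode text fields and files as a multipart/form-data body.

    `files` maps a field name to (filename, content_type, data).
    Returns (body, value for the Content-Type header).
    Names and filenames are assumed not to contain quotes or newlines.
    """
    boundary = "form-boundary-" + secrets.token_hex(12)
    parts = []
    for name, value in fields.items():
        parts.append(
            (f"--{boundary}\r\n"
             f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
             f"{value}\r\n").encode("utf-8"))
    for name, (filename, content_type, data) in files.items():
        head = (f"--{boundary}\r\n"
                f'Content-Disposition: form-data; name="{name}"; filename="{filename}"\r\n'
                f"Content-Type: {content_type}\r\n\r\n").encode("utf-8")
        parts.append(head + data + b"\r\n")
    # The closing delimiter ends with two extra hyphens.
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


if __name__ == "__main__":
    body, content_type = encode_multipart(
        {"projectName": "demo-project"},
        {"filesField": ("Inventory.zip", "application/zip", b"PK\x03\x04")},
    )
    # Build (but do not send) the POST that a form submit would make.
    req = urllib.request.Request(
        "http://localhost:3000/api/UploadProject",  # hypothetical route
        data=body,
        method="POST",
        headers={"Content-Type": content_type},
    )
    print(req.get_method(), len(body), "bytes")
```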