I store a JSON object in one of my database table columns

something like this

{

"country":"Bosnia and Herzegovina",

"city":["Zenica", "Sarajevo", "Tuzla", "Mostar", "Banja Luka"],

"faculty":["Faculty of Mechanical Engineering in Zenica", "Faculty of Electrical Engineering Sarajevo", "Faculty of Electrical Engineering Tuzla"],

"team": ["Čelik", "Željezničar", "Sarajevo", "Sloboda", "Velež", "Zrinjski", "Borac"]

}

I would like to insert each city name into another table, with one name per row but because some of the arrays contain 1 item and others contain 300 items, and there is a lot of data i need to find a solution that is pretty fast.

Here is what i have done so far

INSERT INTO rh_r_name (rh_r_id_rhr, city_name)

SELECT

CONCAT('seq_0_to_', JSON_LENGTH(rhddrd_json, '$.city') AS alc,

JSON_UNQUOTE(JSON_EXTRACT(rhddrd_json, '$.id')),

JSON_UNQUOTE(JSON_EXTRACT(rhddrd_json, CONCAT('$.city[', seq_0_to_3.seq, ']'))) AS single_cities

FROM rh_dump_data_rd

JOIN seq_0_to_3

HAVING single_cities IS NOT NULL;

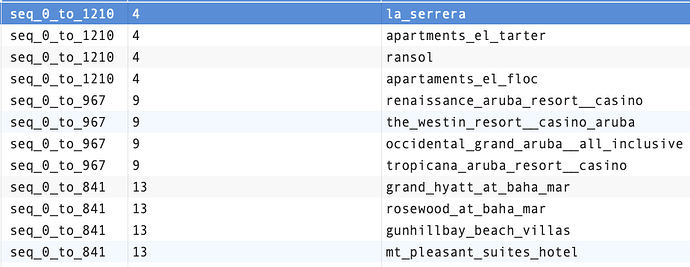

This produces

This currently works and is quite fast, however if i change seq_0_to_3 to seq_0_to_300 then I loose all the speed and it becomes very slow. Consider maybe only one array out of the 260k i have to run through may have 300, and they average say 5 items in an array, it seems like a waste to set the upper limit of the sequence to 300.

Is there a way to rewrite the above sql so seq_0_to_3 is dynamic rather and gets the int from column alias alc