I am putting this in the Coffee Lounge as it is unrelated to Wappler. Suffice to say, if you are having issues with scripts and think it might be a Wappler issue, after reading this you might consider testing the same thing on a different server before spending 2 weeks, like me, thinking your script is wrong.

For the last 2 weeks I have been in a battle with APIs, namely the Google Gmail API and OAuth. Half the issue has been getting the script to keep running to completion, whether the user closes their browser window or leaves it open.

No matter what I tried, I could not get the script to run from start to finish. The script could run for up to 4 hours or even more, depending on how much mail the user has in their Gmail account.

I have altered timeouts in config files, changed half my WHM / cPanel settings around, and spent countless hours researching, testing and adjusting php.ini, .user.ini and .htaccess files. Honestly, I think I have tried it all, including changing PHP versions and database versions.

I decided to do some very simple testing instead, and the results are very strange in my opinion. Maybe someone here who is a little more server administrator savvy could advise.

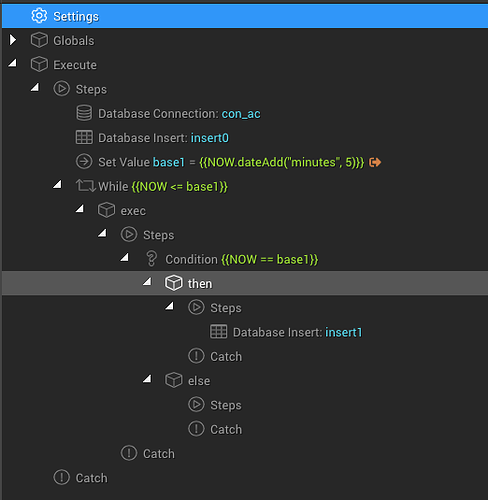

Using the same server I have been fighting the API on, I set up a very simple server action in Wappler.

Steps

Settings : Script Timeout=7200

Database Connection

Database Insert to insert a single record with a value of 0 on script start

Set Value : Name=base1 : Value={{NOW.dateAdd("minutes", 5)}}

While : Condition={{NOW <= base1}}

While Steps : Condition={{NOW == base1}}

Condition Steps : Database Insert to insert a single value of 1

Here is a screenshot of the test described above

All the script does is insert a value of 0 into a database row and, after 5 minutes, insert another database row with a value of 1.
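The steps above can be sketched outside Wappler to see how the loop behaves. This is only a rough simulation, not the actual server action: `FakeClock`, `simulate`, `insert_ms` and `idle_ms` are hypothetical names, and it assumes `NOW` is compared at whole-second resolution. Under that assumption the `NOW == base1` condition stays true for the whole matching second, so the insert repeats as many times as the server can manage in that second.

```python
from datetime import datetime, timedelta


class FakeClock:
    """Hypothetical clock that only moves when told to, so the sketch runs instantly."""

    def __init__(self, start):
        self.t = start

    def now(self):
        # Assumption: NOW is compared at whole-second resolution.
        return self.t.replace(microsecond=0)

    def advance(self, ms):
        self.t += timedelta(milliseconds=ms)


def simulate(steps, insert_ms, idle_ms=100):
    """Return the inserted loop numbers for `steps` chained While steps."""
    clock = FakeClock(datetime(2020, 1, 1))
    rows = [0]  # Database Insert of 0 on script start
    for loop_number in range(1, steps + 1):
        base1 = clock.now() + timedelta(minutes=5)  # Set Value base1
        while clock.now() <= base1:                 # While: NOW <= base1
            if clock.now() == base1:                # Condition: NOW == base1
                rows.append(loop_number)            # Database Insert
                clock.advance(insert_ms)            # assumed cost of one insert
            else:
                clock.advance(idle_ms)              # assumed cost of an idle pass
    return rows


rows = simulate(steps=1, insert_ms=10)
print(rows.count(0), rows.count(1))  # prints "1 100"
```

With an assumed 10 ms per insert, the single step writes 100 rows with a value of 1 rather than one row.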

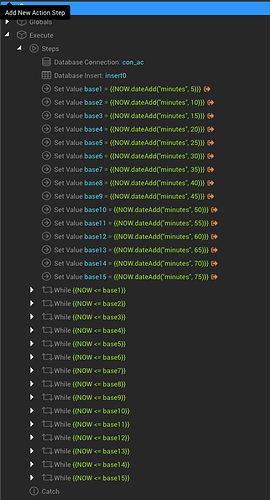

For my real world test, I duplicated the steps 15 times so I end up with something like this

My expected result is 16 database row inserts with values from 0 to 15 spanning a 75 minute period, and the result should be the same regardless of whether the user keeps the browser window open or closes it. If they do keep the window open, it gives an error after exactly 15 minutes.

The database table has 3 columns: ID, Loop Number and Date Added. The ID is a normal auto-incrementing ID, the Loop Number is where I insert my value of 0 through 15 depending on which step runs, and the Date Added field is set to ON UPDATE CURRENT_TIMESTAMP so I can see that the times are exactly what I expected.

Actual Results

On first run it ran for 35 minutes (8 database entries)

On second run it ran for 30 minutes (7 database entries)

On third run it ran for 55 minutes (12 database entries)

On fourth run it ran for 65 minutes (14 database entries)
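As a quick sanity check on these numbers, the sketch below compares each run against the 5 minute schedule. It assumes "database entries" means distinct loop numbers (ignoring duplicate rows), and the names `expected_entries`, `WAIT_MINUTES` and `TOTAL_STEPS` are mine, purely for illustration.

```python
# Observed runs from the list above: (minutes the script ran, database entries).
runs = [(35, 8), (30, 7), (55, 12), (65, 14)]

WAIT_MINUTES = 5   # each step waits 5 minutes before inserting
TOTAL_STEPS = 15   # steps after the initial insert of 0


def expected_entries(minutes_run):
    """Distinct loop numbers expected: the initial 0 plus one per completed wait."""
    return minutes_run // WAIT_MINUTES + 1


for minutes_run, entries in runs:
    last_loop = entries - 1
    on_schedule = expected_entries(minutes_run) == entries
    print(f"ran {minutes_run} min, last loop number {last_loop}, "
          f"on schedule: {on_schedule}, steps never reached: {TOTAL_STEPS - last_loop}")
```

Every run matches the schedule up to the point where it stopped, so the inserts themselves arrived on time and the script simply ended early at a different step each time.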

Obviously the script never ran to completion even once, but the stranger thing was that, with no alteration to the script, the browser used or any other environment variable, each test produced a different result. That led me to believe there is something terribly wrong with my dedicated server or ISP.

I assume that, since the test does not rely on my machine, browser or internet connection after it has been initialised, and does not rely on outside sources like a possibly expiring API token, this script should be a fairly simple thing for my server to run. If it failed, it should at least have produced the exact same results on each test.

Just a note: because the server can write a database record in far less than 1 second, most of the database inserts with a value of, say, 1, 2 or 3 inserted anywhere from 30 to 300 duplicate rows. I also found the severe disparity in what the MySQL server is capable of writing in a single second slightly strange. I would have expected a slight difference based on server load at that exact second in time, but not a difference of 270 rows.
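One rough way to read those duplicate counts, assuming the loop spends the whole matching second inserting back to back, is as an inverse of the time each pass through the loop takes. The helper name `implied_ms_per_insert` is hypothetical, and the figures are a reading of the row counts, not a measurement.

```python
def implied_ms_per_insert(rows_in_matching_second):
    """Average milliseconds per loop pass if the rows filled one full second."""
    return 1000.0 / rows_in_matching_second


for rows in (30, 300):
    print(rows, "rows ->", round(implied_ms_per_insert(rows), 1), "ms per insert")
```

On that reading, 300 rows means roughly 3.3 ms per insert and 30 rows roughly 33.3 ms, so the spread reflects about a tenfold swing in how long each insert took during that particular second.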

At this point I decided to replicate this entire test procedure on a second dedicated server I own, with an entirely different ISP in an entirely different country. The server producing the strange results is in a datacenter in the wonderful third world Africa, where electricity is unstable and telecommunications even more so, although to be honest I feel that even if internet connectivity dropped at the ISP level it should not have impacted this test, as it was self-contained after initialisation.

Note: The African and American servers are on the same versions of MySQL, PHP, WHM and cPanel, CentOS 7.7 v86.0.18

Anyway, the second test server is with InMotion Hosting in Washington DC and I figured it was worth a try. I copied the same script, created the same database test table, and did not adjust a single server setting or add any of my php.ini, .htaccess or .user.ini modifications.

I ran the test file and it ran from start to finish for the full 75 minutes. I re-ran the test 5 times and each time it ran the entire time frame without error.

The only strange thing I noted on the new server, which does run the full script, is the same disparity in database rows inserted per second: this server writes between 1 and 260 rows per second. Considering its spec is far higher, with 48 x 2.3 GHz processors vs. the 6 x 2.8 GHz processors of the bad African server which can’t even complete a script, I would have expected more rows per second than the lower spec server.

So I suppose my question at the end is: should I just dump this African server and get another American one, or could this be due to some server misconfiguration that is possibly fixable? And has anyone else noted such odd behaviour on a server before?