@patrick

Similar to what you did here:

I’m posting an array of inputs and it fails consistently on the 22nd item…every time, regardless of the content.

This is the relevant loop. It iterates over the $_POST.text_step array and inserts each value:

[Screen Shot 2021-07-15 at 3.52.52 PM]

The error that triggers on the 22nd insert is:

code: "ER_BAD_FIELD_ERROR"

message: "insert into `recipe_steps` (`recipe_id`, `step_order`, `step_text`, `tenant_id`) values (9011, 22, {\"21\":\"Fudgy Sweet Potato Brownie\"}, 61) - ER_BAD_FIELD_E…

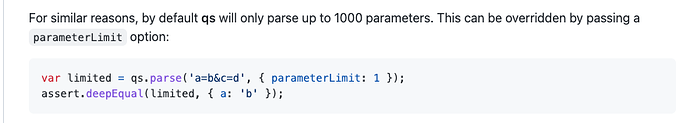
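One possible reading of the error: by default the `qs` parser only builds arrays up to index 20 (its `arrayLimit` option), and higher indices come back as object keys such as `"21"`, which would line up with the 22nd item failing. A minimal sketch of a workaround, assuming the posted field arrives either as a real array or as an object keyed by index; `toOrderedArray` is a hypothetical helper, not part of Wappler or `qs`:

```javascript
// Hypothetical helper: normalize a parsed form field into an ordered array.
// Assumption: the field is an array, an object keyed by numeric index,
// a single scalar value, or missing altogether.
function toOrderedArray(value) {
  if (Array.isArray(value)) {
    return value.slice(); // already an array, return a shallow copy
  }
  if (value !== null && typeof value === 'object') {
    return Object.keys(value)
      .filter((key) => /^\d+$/.test(key))      // keep numeric keys only
      .sort((a, b) => Number(a) - Number(b))   // numeric order, not string order
      .map((key) => value[key]);
  }
  return value == null ? [] : [value];         // scalar or missing field
}
```

Running the posted field through a helper like this before the insert loop would keep the step order intact, but raising the parser limit is still the cleaner fix.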

Can you extend the parameter limit as well, or make one/both of these configurable?

From upload.js:

req.body = qs.parse(encoded, {
    arrayLimit: 100,
    parameterLimit: 10000
});

I've tested this and it fixes a large form post a client has, so I know this works.

From the repository:

@patrick Thoughts on this request?

Do you really pass more than 1,000 parameters?

Yep, I really do. Ultimately, I would like to redesign the whole thing and just send a JSON object back to the server, but today there are over 1,000 hidden inputs for large recipes. (Each recipe has dozens of ingredients, and each ingredient has 40 nutritional values that are returned from an API, plus other input values.)
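As a rough illustration of that redesign, the nested values could be serialized into one JSON string and sent as a single field, so the parameter count stays at one no matter how many ingredients a recipe has. This is only a sketch: the field name `recipe_json`, the function names, and the object shape are assumptions, not the actual form.

```javascript
// Hypothetical shape: each ingredient carries a name and its nutritional values.
function buildRecipePayload(recipeId, ingredients) {
  return JSON.stringify({
    recipe_id: recipeId,
    ingredients: ingredients.map((ingredient, index) => ({
      order: index + 1,               // 1-based position in the recipe
      name: ingredient.name,
      nutrients: ingredient.nutrients // e.g. the values returned by the API
    }))
  });
}

// A single form field (the name is an assumption) carries the whole recipe.
function toFormFields(recipeId, ingredients) {
  return { recipe_json: buildRecipePayload(recipeId, ingredients) };
}
```

On the server, the field would be read back with `JSON.parse`, which sidesteps both the array limit and the parameter limit.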

@patrick I was using the Form Data component and ran into this issue again.

This fixes it for the time being:

In upload.js, adding parameterLimit...

req.body = qs.parse(encoded, {
    arrayLimit: 1000,
    parameterLimit: 10000
});

Is this something I can add to a config file so I'm not updating the .js file?
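For what it's worth, one way this could work without hard-coding the numbers is to read them from the environment and fall back to defaults. A minimal sketch, not an existing feature: `loadParseLimits` and the variable names `QS_ARRAY_LIMIT` and `QS_PARAMETER_LIMIT` are assumptions made up for illustration.

```javascript
// Defaults mirror the values patched into upload.js above.
const DEFAULT_LIMITS = { arrayLimit: 1000, parameterLimit: 10000 };

// Hypothetical helper: read overrides from an environment-like object.
// QS_ARRAY_LIMIT and QS_PARAMETER_LIMIT are assumed names, not real settings.
function loadParseLimits(env = process.env) {
  const readInt = (raw, fallback) => {
    const parsed = Number.parseInt(raw, 10);
    // Fall back when the value is missing, not a number, or not positive.
    return Number.isInteger(parsed) && parsed > 0 ? parsed : fallback;
  };
  return {
    arrayLimit: readInt(env.QS_ARRAY_LIMIT, DEFAULT_LIMITS.arrayLimit),
    parameterLimit: readInt(env.QS_PARAMETER_LIMIT, DEFAULT_LIMITS.parameterLimit)
  };
}

// Intended use inside upload.js (not executed here):
//   req.body = qs.parse(encoded, loadParseLimits());
```

Invalid or missing values fall back to the defaults, so a bad setting cannot silently disable parsing.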

I will update the limits to 10000. That should be enough for everyone; beyond that, you really have to rethink the data you are submitting.