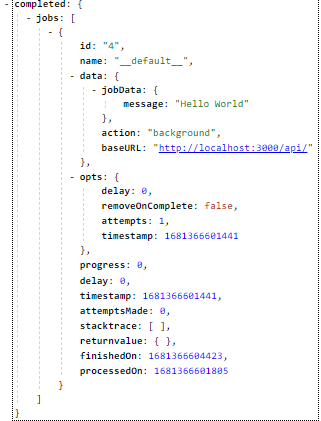

You can either check Redis directly with any Redis GUI or use something like Bull Board https://github.com/felixmosh/bull-board

The next extension release will have more logging functionality, so it may be worth waiting a week.

Thank you Tobias for the information. I am grateful in advance for your work on the new version of the extension. If the new version will be able to log queues, it will help a lot with debugging.

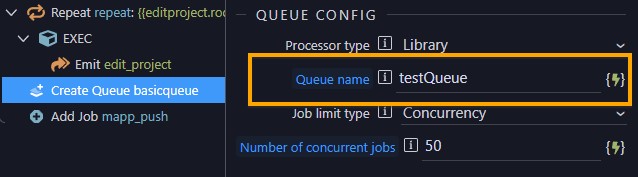

I want to clarify one thing. If I add a step that creates a queue with the same name at the end of each API action, did I understand the Redis logs correctly?

As far as I can tell, the queue is created only once, and further attempts at this step do not create anything. Is that right?

The creation of a queue is what attaches workers to process anything previously added to the queue. For example, you could execute a series of Add job actions, which would put jobs in a queue, where they would wait forever. If a queue is created with that name, then the processing of those jobs would begin.

If there is an attempt to create a queue, and the name of that queue and the type of queue (api or library) are the same, then it will not be recreated, but it will make sure workers are attached.

Assuming the queue name is the same, it would not be necessary (or desired) to have the create queue action inside a repeat. Create it once.
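To make the behavior described above concrete, here is a toy in-memory model. It is only a sketch: it does not use Bull or the extension's code, and `QueueRegistry`, `addJob`, `createQueue`, and `drain` are hypothetical names made up for illustration.

```javascript
// Toy model only. NOT the extension's implementation or Bull's API;
// every name below is hypothetical.
class QueueRegistry {
  constructor() {
    this.queues = new Map();  // `${type}:${name}` -> { name, type, workersAttached }
    this.waiting = new Map(); // queue name -> job payloads waiting for a worker
    this.processed = [];      // payloads that a worker has picked up
    this.created = 0;         // how many queues were actually created
  }

  // "Add job": the job is stored even if no queue with that name exists yet.
  addJob(name, data) {
    if (!this.waiting.has(name)) this.waiting.set(name, []);
    this.waiting.get(name).push(data);
    this.drain(name);
  }

  // "Create queue": same name and type is not recreated,
  // but workers are always (re)attached.
  createQueue(name, type) {
    const key = `${type}:${name}`;
    let queue = this.queues.get(key);
    if (!queue) {
      queue = { name, type, workersAttached: false };
      this.queues.set(key, queue);
      this.created += 1;
    }
    queue.workersAttached = true;
    this.drain(name);
    return queue;
  }

  // Process waiting jobs, but only if a worker is attached for this name.
  drain(name) {
    const hasWorker = [...this.queues.values()].some(
      (q) => q.name === name && q.workersAttached
    );
    if (!hasWorker) return;
    const jobs = this.waiting.get(name) || [];
    while (jobs.length > 0) this.processed.push(jobs.shift());
  }
}

const registry = new QueueRegistry();
registry.addJob('emails', { id: 1 });
registry.addJob('emails', { id: 2 });
const waitingBefore = registry.waiting.get('emails').length; // jobs sit idle

registry.createQueue('emails', 'api'); // creates the queue, jobs start processing
registry.createQueue('emails', 'api'); // same name and type: nothing new is created
```

In this model the second `createQueue` call is harmless but redundant, which is why creating the queue once, outside any repeat, is enough.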

@tbvgl shows how to call an API on server start, which is a great place to set up your queues and to restart them should jobs be in the queue before the restart.

The extension has been updated to include logging which is very helpful in debugging the queue behavior since much of it takes place in backend processes. I can’t find any breaking changes, but PLEASE TEST this version thoroughly as the logging actions were inserted throughout the code.

Special thanks to @tbvgl for contributions of ENV variables for Redis settings, bull log option, and optimization of my rudimentary use of javascript!

Three types of logging are supported with log levels of Error, Warn, Info, Debug. The logging action configures logging globally for all other bull queue actions that execute after it.
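As a rough illustration of how a global log level usually filters messages, here is a minimal sketch. It is not the extension's code: `configureLogging` and `shouldLog` are hypothetical names, and both the default level and the ordering (Error least verbose, Debug most verbose) are assumptions based on common convention.

```javascript
// Sketch only; names, default level, and ordering are assumptions,
// not taken from the extension.
const LEVELS = ['error', 'warn', 'info', 'debug']; // least to most verbose
let globalLevel = 'error'; // assumed default

// Sets the level once; every later shouldLog() call sees the new value.
function configureLogging(level) {
  if (!LEVELS.includes(level)) throw new Error(`Unknown log level: ${level}`);
  globalLevel = level;
}

// A message is logged when its level is at or below the configured verbosity.
function shouldLog(level) {
  const index = LEVELS.indexOf(level);
  if (index === -1) return false;
  return index <= LEVELS.indexOf(globalLevel);
}

configureLogging('warn');
const logsError = shouldLog('error'); // true: errors pass at level "warn"
const logsInfo = shouldLog('info');   // false: info is more verbose than "warn"
```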

@mebeingken I’m on a Windows server, the only way I can run Redis is via Remote Docker. Will this excellent extension work with Remote Docker…? I’m guessing it will but need to check before committing any time to it.

I think with the addition by @tbvgl of environment variables to specify the Redis connection, it can be anywhere

It works with remote docker. You can use ENV variables for the connection or the connection string in server connect. I’ve added parsing for credentials like pass and user from Wappler globals, so if you define an external connection inside server connect, then you are good to go. If you use ENV variables, then that will overwrite the global settings.
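The precedence described above (ENV variables win over the global Server Connect settings) can be sketched as a simple merge. This is an illustration under assumptions, not the extension's code: the variable names `REDIS_HOST`, `REDIS_PORT`, `REDIS_USER`, and `REDIS_PASSWORD`, the shape of the `globals` object, and the function name are all hypothetical.

```javascript
// Sketch only. The ENV variable names and the globals shape are hypothetical;
// check the extension's documentation for the real ones.
function resolveRedisConnection(env, globals) {
  const fromGlobals = {
    host: globals.host,
    port: globals.port,
    username: globals.user,
    password: globals.pass,
  };

  // Only copy ENV values that are actually set, so unset ones fall back to globals.
  const fromEnv = {};
  if (env.REDIS_HOST) fromEnv.host = env.REDIS_HOST;
  if (env.REDIS_PORT) fromEnv.port = Number(env.REDIS_PORT);
  if (env.REDIS_USER) fromEnv.username = env.REDIS_USER;
  if (env.REDIS_PASSWORD) fromEnv.password = env.REDIS_PASSWORD;

  // Later spread wins: ENV values overwrite the global settings.
  return { ...fromGlobals, ...fromEnv };
}

const connection = resolveRedisConnection(
  { REDIS_HOST: 'redis.example.internal', REDIS_PORT: '6380' },
  { host: 'localhost', port: 6379, user: 'app', pass: 'secret' }
);
```

Here the host and port come from the ENV values, while the credentials fall back to the global settings because no ENV value was provided for them.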

Thanks @mebeingken . This is very useful for background processes.

I followed the steps however I’m having this error after adding a job and creating a queue:

Error loading process file /opt/node_app/extensions/server_connect/modules/bull_processor.js. Parser.parseValue is not a function

What did I miss?

Hey Kevin,

It looks like you are attempting to use a library file processor. Try instead to set up the queue as an API type and use the Add Job API action.

It has been a while since I have used the library method other than a quick test, but I use the api style all the time.

Let me know how it goes.

It works now using Add Job API

Thanks @mebeingken

Just a quick heads up: I’m refactoring the full extension and rewriting much of the code in preparation for the release of the new major version 2.0.

This is a major version because there will be breaking changes. The release is expected to be in the coming week and has many interesting new features.

So if you want to prepare for version 2.0, then here you go:

Library jobs will be deprecated. So if you currently use library jobs, you must replace them with API jobs before upgrading.

Logging is entirely outsourced to https://community.wappler.io/t/advanced-logger-extension/.

So this extension will be required for v 2.0. This will allow you to configure logging globally instead of on a per-extension basis and allow us to implement a lot of logging into the bull extension.

Isn’t using library jobs better than API? I have not run any performance tests, but API calls would take a few more ms than executing library actions, no?

The API add job action was born from the library action not working 100% of the time. And then people started reporting trouble making the library action work, so we feel it is best to deprecate it.

If somebody wants to refactor that action so it works all the time, I’m happy to bring it back.

Also just a heads up, we will be bringing more extensibility improvements in Wappler this week.

Server Connect extensions can then also be packaged and published to NPM (or installed directly from GitHub) and automatically installed in Wappler. So there will no longer be a need for manual file copying.

I got the library actions to work, but there is no upside to using them for bull jobs. The only speed difference was due to Axios calling the app URL, so DNS needed to be resolved.

Wappler has a bunch of middleware processing requests. These are helpful, so API routes are better than library actions for our use case.

I’m modifying the processor to use localhost if in a docker environment. That will get rid of the delay in resolving DNS and guarantees the job runs on the current node if you run a swarm or kubernetes.

I did think of that, but did not have enough time to test it out on the said project. Definitely better to have.

I don’t use Wappler’s docker deployment. Are you planning to use some Wappler reference to identify… or an ENV variable?

I’m using an ENV variable so that even non docker users can use this approach if their app supports it.

nvm I got it to work by using the app directly:

const app = new App({ method: `POST`, body: jobData, session: {}, cookies: {}, signedCookies: {}, query: {}, headers: {} });

const actionFile = await fs.readJSON(`app/api/${action}.json`);

await app.define(actionFile, true);

This will allow us to support GET requests, sessions, etc. as well in a later version, and we don’t need to worry about localhost anymore.

To change from lib to action, just create the action and set params instead of GET or POST?

Yeah, but libs work a little differently under the hood and I still don’t see a single good reason to support them for bull jobs.