I was surprised by the question, as @sid knows the UI inside out.

There is a Redis tab on SC options.

Damn! I knew about the Redis tab, but I just thought it was an on/off toggle.

I never switched it on, so I never realised it asks for a path.

Going to try this out asap! Thanks @JonL

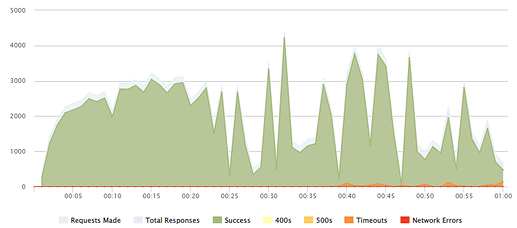

So, here is some benchmarking.

I used the free plan of a nice online tool, https://loader.io/

I tested one page, which is static and almost empty. Obviously, results may vary on different pages and projects.

Each test lasts 1 minute.

Also, I didn’t take notes along the way (silly, I know), so I may be mistaken on some details.

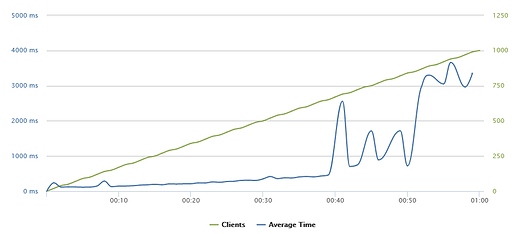

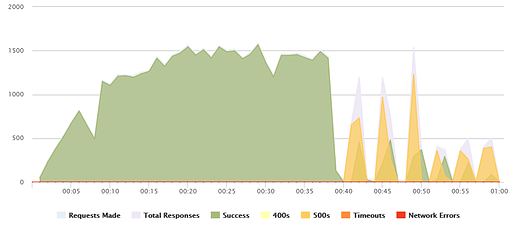

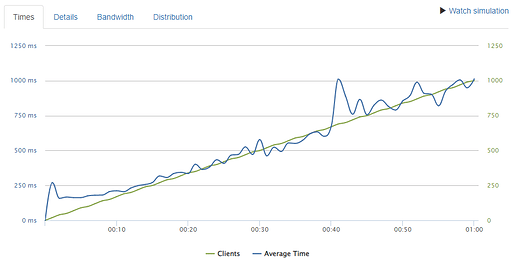

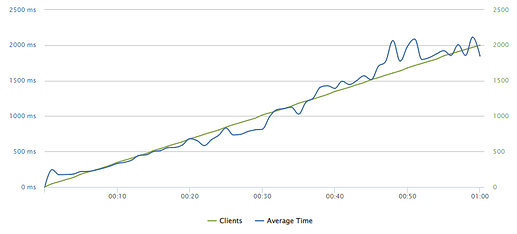

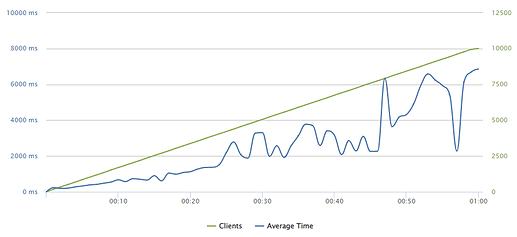

From 0 to 1000 clients.

(1) 6 cpu 6 ram. Plesk. Without any scaling.

As you can see, it is OK up to 600 clients, but then it fails completely.

The number of CPUs doesn’t affect this much.

From here on, only the Docker setup.

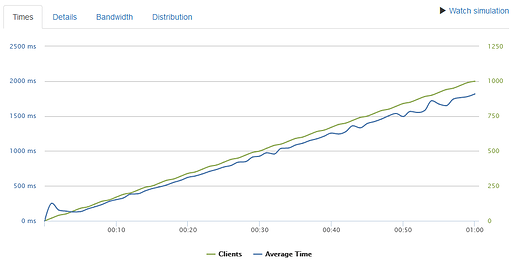

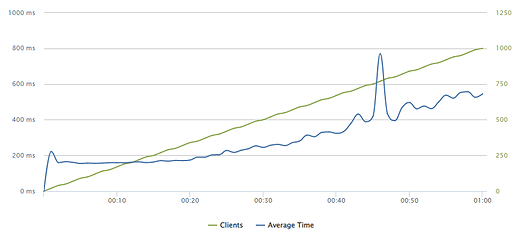

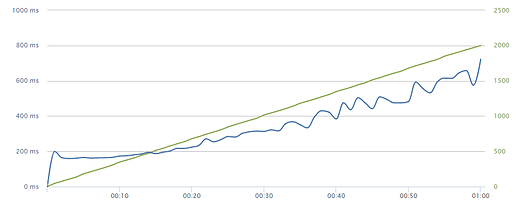

(2) 1 cpu 2 ram. No replica.

No errors, but the load time is not good: 1800 ms at the end of the test.

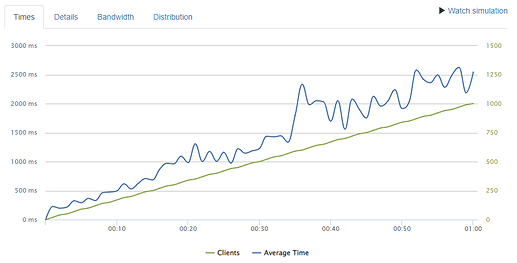

(3) 1 cpu 2 ram. 4 replicas.

It seems that without additional CPU, extra replicas only make things worse.

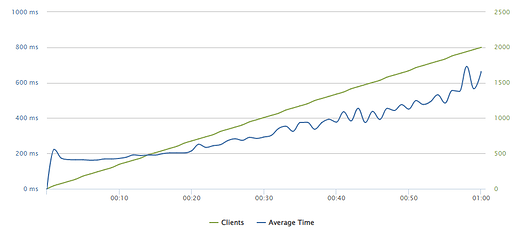

(4) 2 cpu 2 ram. 4 replicas.

Roughly twice as fast. Now it is 1000 ms at the end of the test.

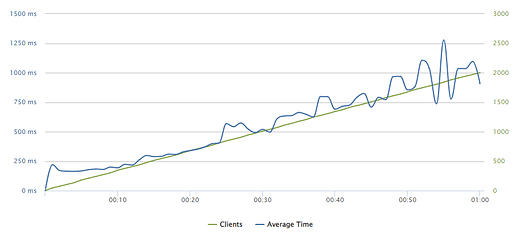

(5) 4 cpu 2 ram. 4 replicas.

Roughly twice as fast again: 550 ms at 1000 clients.

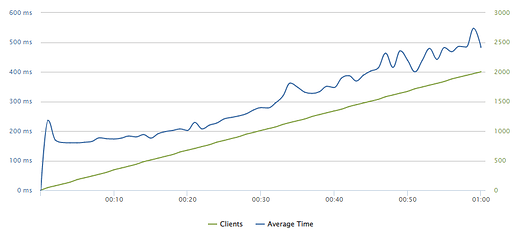

From 0 to 2000 clients.

(6) The same setup, just for 2000 clients.

1000 ms, no errors.

(7) 8 cpu 2 ram. 6 replicas.

600 ms at finish.

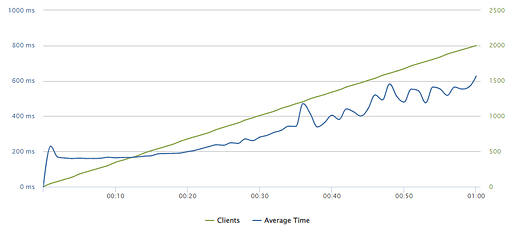

(8) But what if we set 0 replicas?

It shows no errors, but it is clearly slower: 2000 ms.

(9) And what if we set 16 replicas?

It turns out this is no different from 6 replicas, as we still have only 8 CPUs.

(10) 8 cpu 4 ram. 6 replicas.

Almost the same as with 2 ram.

(11) 8 cpu 6 ram. 6 replicas.

Looks the same.

(12) But how about 0 - 10000 clients?!

It became very slow, but it held up.

So, here is my personal summary of this synthetic testing.

- If you don’t intend to tune your Plesk, it is better not to use it for production. On a cheap VPS the Docker setup will be slower, but at least it lasts longer before breaking completely.

- It doesn’t make sense to increase replicas if you don’t have enough CPUs.

- If you increase both the CPUs and the number of replicas, loading speed increases in almost the same proportion (at least in the range from 1 to 8 CPUs).

- Increasing CPUs without additional replicas gives only a small boost.

- In this particular synthetic case, increasing RAM has no noticeable impact.

I understand that these conclusions are obvious to most developers, but they turned out to be useful for me and may help someone else too.
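The "almost the same proportion" point can be checked against the figures reported above for runs (2), (4) and (5). This is only a rough sketch using those three numbers; the replica count also changed between (2) and (4), so it is not an isolated CPU measurement, and the helper name `speedups` is made up for illustration.

```python
# Load times reported above for the 0 to 1000 client runs (ms at the end of the test).
runs = [
    ("(2) 1 cpu, no replica", 1, 1800),
    ("(4) 2 cpu, 4 replicas", 2, 1000),
    ("(5) 4 cpu, 4 replicas", 4, 550),
]


def speedups(runs):
    """Return (label, cpu_ratio, speedup) for each run compared with the previous one."""
    out = []
    for (_, prev_cpu, prev_ms), (label, cpu, ms) in zip(runs, runs[1:]):
        out.append((label, cpu / prev_cpu, prev_ms / ms))
    return out


for label, cpu_ratio, speedup in speedups(runs):
    print(f"{label}: {cpu_ratio:.1f}x CPU -> {speedup:.2f}x faster")
```

Each doubling of CPU gives a little under a 2x improvement in these runs.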

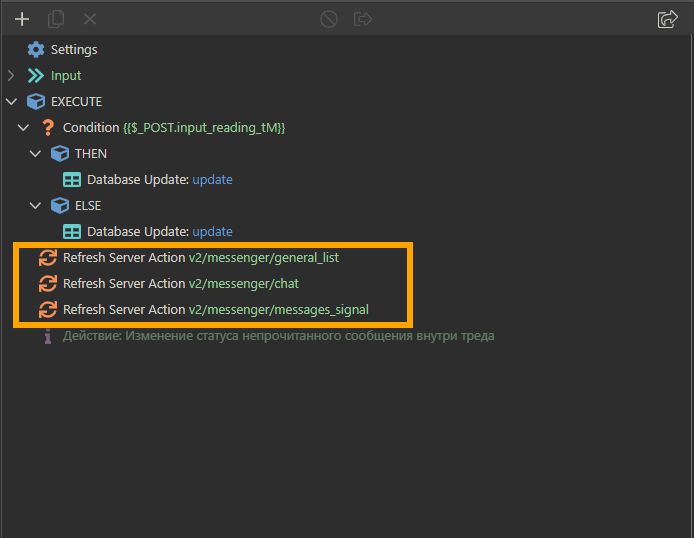

Tested it. Unfortunately, this option does not work if the application actively uses web sockets, for example, a messenger. The problem is that two different containers are unaware of each other’s actions.

I will describe it in more detail. Imagine that we have created 2 replicas (container1 and container2). Two users visit the site. Traefik directs one user to container1 and the other user to container2. When user1 writes a message, on the server side the sockets are updated only in container1. Container2 does not know about the new message. Therefore, user2 will not receive it until he manually refreshes the page or writes a message himself.
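The scenario above can be pictured with a small in-memory simulation. This is only a sketch of the described behaviour, not Wappler or Traefik code; the `Container` class and its methods are hypothetical names.

```python
class Container:
    """One app replica that keeps its own private list of connected socket clients."""

    def __init__(self, name):
        self.name = name
        self.clients = {}  # user -> list of messages that user has received

    def connect(self, user):
        self.clients[user] = []

    def emit(self, message):
        # Only clients connected to THIS container receive the message.
        for inbox in self.clients.values():
            inbox.append(message)


container1 = Container("container1")
container2 = Container("container2")
container1.connect("user1")  # the proxy routed user1 here
container2.connect("user2")  # and user2 here

container1.emit("new message from user1")
print(container1.clients["user1"])  # user1 sees the update
print(container2.clients["user2"])  # user2 sees nothing
```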

Are you using Redis, and can you confirm the issue occurs even with Redis enabled?

Yes, of course. Redis is enabled. But Redis has nothing to do with it. Redis solves the problem of sessions when using multiple containers. I’m describing another problem.

Supposedly Redis would solve that according to Patrick:

If you test it, you will see that Redis does not help solve the sockets problem when using multiple containers.

But I became very interested in the technical side of this, which is why the following question arose.

In Wappler projects, in order for sockets to work, it is necessary to refresh specific server actions when changes are made to the database:

Question

How can Redis help container2 to find out that certain server actions were refreshed in container1, given that Redis is just a data storage?

As Patrick mentioned, when Redis is enabled, the websockets server uses it to store all the connections.

So no matter which container you connect to, you end up in the global connections list, and when a websockets emit is done server side to refresh data, it goes to all connected clients. Again, it does not matter which instance you initially connected to.

So it should all work fine with multiple instances with Redis.
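One way to picture this is a shared relay that every instance emits through. The sketch below is an in-memory stand-in under that assumption: `Broker` is a hypothetical class, not the Redis API, and how Wappler actually uses Redis internally is not shown in this thread.

```python
class Broker:
    """In-memory stand-in for the single shared Redis server (hypothetical)."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self, callback):
        self.subscribers.append(callback)

    def publish(self, message):
        # Hand the message to every instance that registered itself.
        for callback in self.subscribers:
            callback(message)


class Container:
    """One app instance; emits go through the shared broker instead of staying local."""

    def __init__(self, name, broker):
        self.name = name
        self.clients = {}  # user -> list of received messages
        self.broker = broker
        broker.subscribe(self._deliver_locally)

    def connect(self, user):
        self.clients[user] = []

    def _deliver_locally(self, message):
        for inbox in self.clients.values():
            inbox.append(message)

    def emit(self, message):
        self.broker.publish(message)


broker = Broker()
container1 = Container("container1", broker)
container2 = Container("container2", broker)
container1.connect("user1")
container2.connect("user2")

container1.emit("refresh")
print(container1.clients["user1"], container2.clients["user2"])
```

With one shared relay, the emit from container1 reaches user2 on container2 as well.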

I’ve done a lot of tests and I think I’ve found the problem. If you deploy several different applications with Redis support, then several Redis containers with the same address will be deployed on the server. This seems to be what creates the problems, and the problems are not only with sockets: I observed a lot of odd behaviour in the applications. When I removed the extra Redis containers, the applications returned to normal and the sockets began to work stably, even with container replication.

My conclusion is that if you need to deploy several different applications with Redis support on the same server, you should deploy only one Redis container.

There are several questions in this regard:

- Will there be data confusion in Redis when storing data from multiple applications?

- Do I understand correctly that if I need to manage data in Redis (for example, clear data), I need to connect to the Redis container using something like https://winscp.net to get into the 'redis-volume:/data' directory? I verified that even if I delete the Redis container and image from the server and then reinstall Redis, the session data does not disappear, and when I load the application I am still authenticated.
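On the first question, a common general way to keep several applications from colliding in one shared key-value store is to prefix every key with the application name. Whether Wappler does this is not stated in this thread, so the sketch below is only an illustration: a plain dict stands in for the single Redis container, and `NamespacedStore` and the app names are hypothetical.

```python
class NamespacedStore:
    """Wraps a shared key-value store and prefixes every key with an app name."""

    def __init__(self, backend, app_name):
        self.backend = backend
        self.prefix = f"{app_name}:"

    def set(self, key, value):
        self.backend[self.prefix + key] = value

    def get(self, key, default=None):
        return self.backend.get(self.prefix + key, default)


shared = {}  # stand-in for the one shared Redis container
shop = NamespacedStore(shared, "shop")
blog = NamespacedStore(shared, "blog")

# Both apps use the same session id without overwriting each other.
shop.set("session:abc", {"user": 1})
blog.set("session:abc", {"user": 7})

print(shop.get("session:abc"), blog.get("session:abc"))
print(sorted(shared))
```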

Oh yes, but of course the key is to have a single Redis server that stores all the sessions and socket connections centrally.

Then you can have as many Node instances as you need to share the real load, and scale up your app just by increasing the number of instances.

And Redis is an industry heavyweight in-memory database, so it can handle thousands of simultaneous connections without breaking a sweat.