I saw that, and kind of figured the same however all those posts do not seem to able to get around the issue of getting the table name and column name dynamically, while so far this is actually almost working, got a dynamic table name, got a dynamic column name, but those single quotation marks ' are breaking it

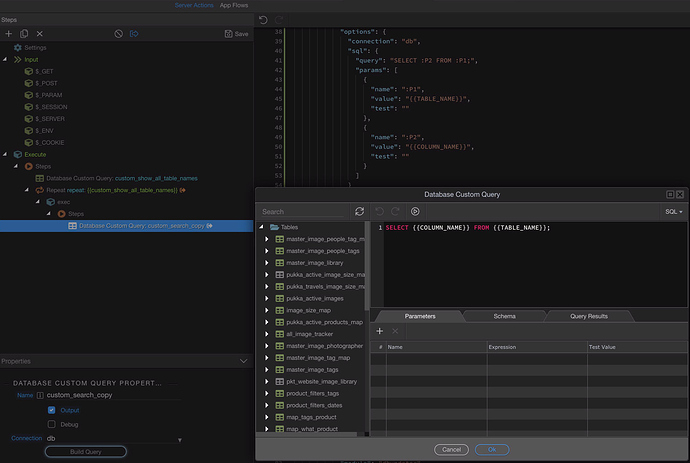

Heres a secret: you can use direct Wappler expression in custom query. Don’t use params. Just bind expression like you would in a set value.

select '{{column_name}}' from '{{table_name}}' ;

WARNING: Do not use this for user submitted value. This type of query is a huge security risk.

I tried that funnily enough and got some really strange result. Give me a sec, I will show some screenshots.

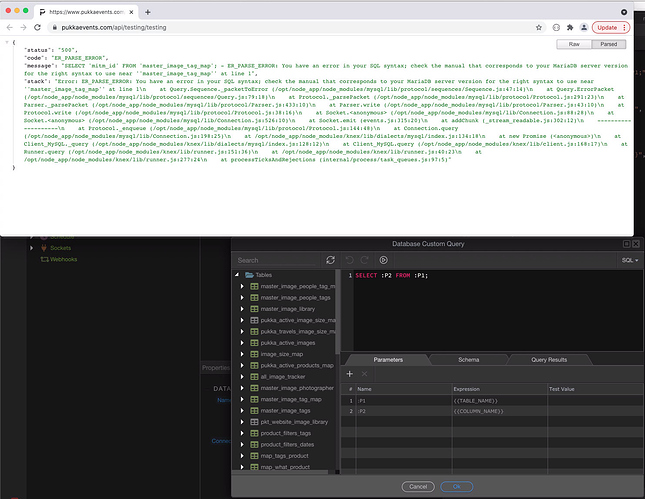

This is when using :P1 and :P2 which correctly get the dynamic values i want, but as strings.

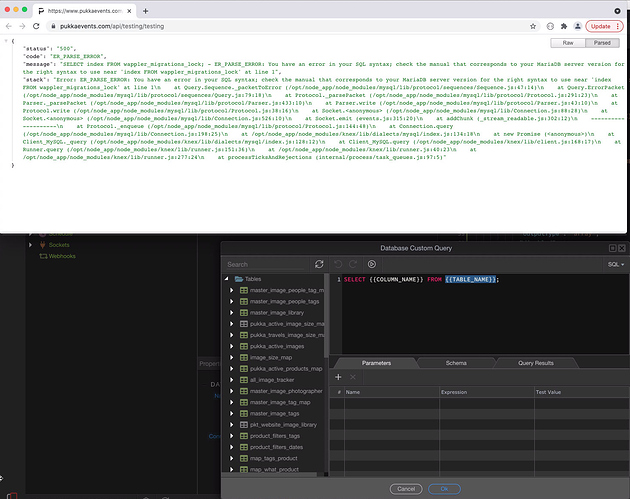

This is when I try it direct with {{COLUMN_NAME}} and {{TABLE_NAME}}

For some strange reason it then pics up the wrong dynamic values

I used something similar a week ago, and it works fine.

Not sure about the single quotes though. I don’t think column and table names even need that.

Maybe try without that.

Well in my case it is the difference from the query being valid and invalid, so i really just want the single quote marks removed in my case.

There was a similar discussion here. I think it can be definitely done using a stored procedure, but @sid’s method looks interesting (though I haven’t been able to get it to work yet). @sid offered alternative suggestions in the other thread too.

There is no need for quotes for putting direct bindings.

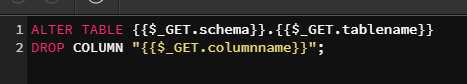

Here’s the example of what I did a or so week ago:

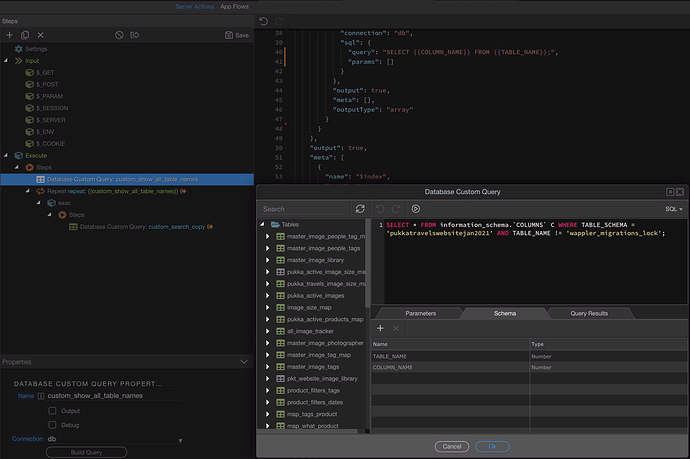

This is Postgre, hence the double quotes. MySQL does not require those.

Thanks @sid and @TomD, I got this working, so Sid you quite correct, I have to use the direct bindings rather than the parameters, because the parameters add the single quote characters and turn everything into strings, the second thing is that MySQL does not like the column name of index sitting inside the wappler_migrations_lock table, so I also have to tell my first query to ignore that table in its search, or I need to use the backtick character arount all the column names to tell the query the word index is not a command but a column name.

Here is the final working script for anyone that may need something like this

Just by the way the reason I am trying to do this is to land up with a similar functionality that phpMyAdmin has where I can search for all instances of something over my entire database.

Paul, make sure to read and acknowledge sid’s advice. Someone could delete the whole database if they inject sql there. It’s very easy.

Thanks, I did take note of that on my side, im not even keeping this live, im making it using it to fix an issue and then removing it.

I know this would be a huge security hole indeed.

I don’t think you would want to create queries using this method for any public-facing site, but it’s a powerful feature. You can add precautions, like only accepting certain table names or rejecting certain strings or characters in queries etc.

I didn’t realise the technique was possible - and am surprised that it is. When I asked @George about this, when the custom query feature was added, he said:

you can’t have tables or column names as parameters.

This is how PDO prepared statements work with their parameters.

It is also saving you from a lot of sql injection attacks.

I wonder if @sid’s ‘secret’ technique was intended to be possible. If not, hopefully it won’t be changed to make it not work. I think it should be an option, as it would be if you were writing code by hand, in PHP for example.

I agree Tom, it’s not something I would use in any form of production as such, but there are instances where it’s easier doing this than a sql dump and using a text editor.

Nice to see it’s actually achievable though.

I have a custom module to handle all this stuff using knex that will sanitize the query before running it.

So you can use this approach in production. You just need to make sure no form of sql injection is possible.

That is very risky. It’s better to use a proven library that handles this for you.

@JonL, would using the built in server connect security providers be enough if it were intended for usage just by the super administrator, so only one person would have credentials to use it. But would that security method be enough to stop attacks on a script like this.

No, it wouldn’t be enough.

You are just putting a gate in front of an open vault. Ideally you want a gate in front of a closed vault.

If you forget to close the gate at some point someone could steal your money.

Was just going to say what a nice discussion here about “if I leave my house key under the doormat, would it be safe enough?”

No, you don’t want to leave the key under the doormat, you want to lock the door and keep the key in your pocket.

You could always put a sign on your door.

“Please be nice.”

Bahaha, I live in South Africa, you would have to swallow the key and even then it could be stolen.

But if someone can get that amount of access (the ‘super administrator’), I would have thought it’s probably likely they could destroy your database anyway.

Super administrator is an invented word in this context. He is just referring to give access to a specific user or group of users. But he could screw up the logic of setting te security provider. He could make a change to a role in the database or a bug with Security Provider could appear.